Deploy an EKS cluster with Terraform (advanced)

This guide offers a detailed tutorial for deploying an Amazon Web Services (AWS) Elastic Kubernetes Service (EKS) cluster, tailored explicitly for deploying Camunda 8 and using Terraform, a popular Infrastructure as Code (IaC) tool.

It is recommended to use this guide for building a robust and sustainable infrastructure over time. However, for a quicker trial or proof of concept, using the eksctl method may suffice.

This guide is designed to help leverage the power of Infrastructure as Code (IaC) to streamline and reproduce a cloud infrastructure setup. By walking through the essentials of setting up an Amazon EKS cluster, configuring AWS IAM permissions, and integrating a PostgreSQL database and an OpenSearch domain (as an alternative to Elasticsearch), this guide explains how to use Terraform with AWS, making it accessible even to those new to Terraform or IaC concepts. It utilizes AWS-managed services when available, providing these as an optional convenience that you can choose to use or not.

If you are completely new to Terraform and the idea of IaC, read through the Terraform IaC documentation and give their interactive quick start a try for a basic understanding.

Requirements

- An AWS account to create any resources within AWS.

- AWS CLI, a CLI tool for creating AWS resources.

- Terraform

- kubectl to interact with the cluster.

- jq to interact with some Terraform variables.

- IAM Roles for Service Accounts (IRSA) configured.

  - This simplifies the setup by not relying on explicit credentials and instead creating a mapping between IAM roles and Kubernetes service accounts based on a trust relationship. A blog post by AWS visualizes this on a technical level.

  - This allows a Kubernetes service account to temporarily impersonate an AWS IAM role to interact with AWS services like S3, RDS, or Route53 without having to supply explicit credentials.

  - IRSA is recommended as an EKS best practice.

- AWS Quotas

  - Ensure at least 3 Elastic IPs (one per availability zone).

  - Verify quotas for VPCs, EC2 instances, and storage.

  - Request increases if needed via the AWS console (guide). You only pay for the resources you use.

- This guide uses GNU/Bash for all the shell commands listed.

For the tool versions used, check the .tool-versions file in the repository. It contains an up-to-date list of versions that we also use for testing.
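Before starting, it can help to confirm that the required command-line tools are available on your PATH. The following is a minimal sketch and not part of the reference architecture; the helper name `missing_tools` is hypothetical, and the check does not verify tool versions.

```bash
#!/usr/bin/env bash
# Print the name of every tool from the argument list that is not on PATH.
# `missing_tools` is a hypothetical helper, not part of the reference architecture.
missing_tools() {
  local tool
  for tool in "$@"; do
    # `command -v` succeeds only if the shell can resolve the tool.
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Check the tools listed in the requirements above.
missing="$(missing_tools aws terraform kubectl jq)"
if [ -n "$missing" ]; then
  printf 'Missing tools:\n%s\n' "$missing" >&2
fi
```

If nothing is printed, all four tools were found. Compare the installed versions against the .tool-versions file manually.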

Considerations

This setup provides an essential foundation for beginning with Camunda 8, though it's not tailored for optimal performance. It's a good initial step for preparing a production environment by incorporating IaC tooling.

Terraform can be opaque in the beginning. If you only want to understand what is happening, you may try out the eksctl guide to see which resources are created and how they interact with each other.

To try out Camunda 8 or develop against it, consider signing up for our SaaS offering. If you already have an Amazon EKS cluster, consider skipping to the Helm guide.

For the simplicity of this guide, certain best practices will be provided with links to additional documents, enabling you to explore the topic in more detail.

The following security considerations were made for ease of adoption and development and should be reassessed before deploying to production. These items were generated by Trivy and can be easily referenced using their IDs in the aqua vulnerability database.

AVD-AWS-0040 #(CRITICAL): Public cluster access is enabled.

AVD-AWS-0041 #(CRITICAL): Cluster allows access from a public CIDR: 0.0.0.0/0

AVD-AWS-0104 #(CRITICAL): Security group rule allows egress to multiple public internet addresses.

AVD-AWS-0343 #(MEDIUM): Cluster does not have Deletion Protection enabled

AVD-AWS-0178 #(MEDIUM): VPC does not have VPC Flow Logs enabled.

AVD-AWS-0038 #(MEDIUM): Control plane scheduler logging is not enabled.

AVD-AWS-0077 #(MEDIUM): Cluster instance has very low backup retention period.

AVD-AWS-0133 #(LOW): Instance does not have performance insights enabled.

Reference architectures are not intended to be consumed exactly as described. The examples provided in this guide are not designed to be consumed as a Terraform module. We recommend making any modifications locally, which is why the guide has you clone the repository.

This also makes it easy to extend and customize the codebase to fit your needs. However, it's important to note that maintaining the infrastructure is your responsibility. Camunda will update and refine the reference architecture, which may not be backward compatible with your code. You can use these updates to upgrade your customized codebase as needed.

Following this guide will incur costs on your Cloud provider account, namely for the managed Kubernetes service, running Kubernetes nodes in EC2, Elastic Block Storage (EBS), and Route53. More information can be found on AWS and their pricing calculator as the total cost varies per region.

Variants

We support two variants of this architecture:

- The first, standard installation, utilizes a username and password connection for the Camunda components (or simply relies on network isolation for certain components). This option is straightforward and easier to implement, making it ideal for environments where simplicity and rapid deployment are priorities, or where network isolation provides sufficient security.

- The second variant, IRSA (IAM Roles for Service Accounts), uses service accounts to perform authentication with IAM policies. This approach offers stronger security and better integration with AWS services, as it eliminates the need to manage credentials manually. It is especially beneficial in environments with strict security requirements, where fine-grained access control and dynamic role-based access are essential.

How to choose

- If you prefer a simpler setup with basic authentication or network isolation, and your security needs are moderate, the standard installation is a suitable choice.

- If you require enhanced security, dynamic role-based access management, and want to leverage AWS’s identity services for fine-grained control, the IRSA variant is the better option.

Both can be set up with or without a Domain (ingress).

Outcome

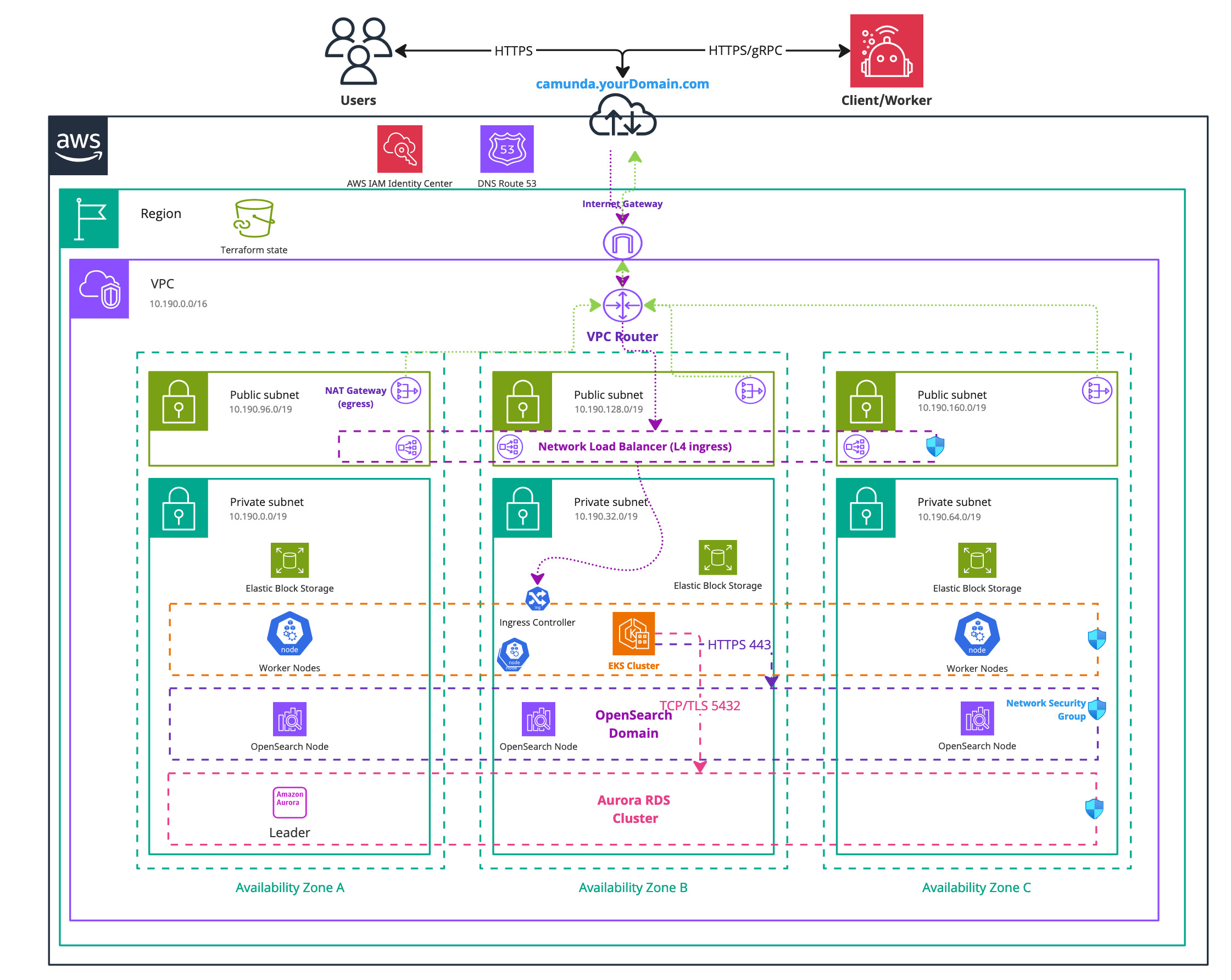

Infrastructure diagram for a single region EKS setup

Following the steps in this tutorial will result in:

- An Amazon EKS Kubernetes cluster running with four nodes ready for Camunda 8 installation.

- The EBS CSI driver is installed and configured, which is used by the Camunda 8 Helm chart to create persistent volumes.

- A managed Aurora PostgreSQL 15.x instance to be used by the Camunda platform.

- A managed OpenSearch domain created and configured for use with the Camunda platform.

- (optional) IAM Roles for Service Accounts (IRSA) configured.

  - This simplifies the setup by not relying on explicit credentials, but instead allows creating a mapping between IAM roles and Kubernetes service accounts based on a trust relationship. A blog post by AWS visualizes this on a technical level.

  - This allows a Kubernetes service account to temporarily impersonate an AWS IAM role to interact with AWS services like S3, RDS, or Route53 without supplying explicit credentials.

1. Configure AWS and initialize Terraform

Obtain a copy of the reference architecture

The first step is to download a copy of the reference architecture from the GitHub repository. This material will be used throughout the rest of this documentation. The reference architectures are versioned using the same Camunda versions (stable/8.x).

The provided reference architecture repository allows you to directly reuse and extend the existing Terraform example base. This sample implementation is flexible to extend to your own needs without the potential limitations of a Terraform module maintained by a third party.


With the reference architecture copied, you can proceed with the remaining steps outlined in this documentation. Ensure that you are in the correct directory before continuing with further instructions.

Terraform prerequisites

To manage the infrastructure for Camunda 8 on AWS using Terraform, we need to set up Terraform's backend to store the state file remotely in an S3 bucket. This ensures secure and persistent storage of the state file.

Advanced users may want to handle this part differently and use a different backend. The backend setup provided is an example for new users.

Set up AWS authentication

The AWS Terraform provider is required to create resources in AWS. Before you can use the provider, you must authenticate it using your AWS credentials.

A user who creates resources in AWS will always retain administrative access to those resources, including any Kubernetes clusters created. It is recommended to create a dedicated AWS IAM user for Terraform purposes, ensuring that the resources are managed and owned by that user.

You can further change the region and other preferences and explore different authentication methods:

- For development or testing purposes you can use the AWS CLI. If you have configured your AWS CLI, Terraform will automatically detect and use those credentials. To configure the AWS CLI:

aws configure

Enter your AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, region, and output format. These can be retrieved from the AWS Console.

- For production environments, we recommend the use of a dedicated IAM user. Create access keys for the new IAM user via the console, and export them as AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY.
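Whichever authentication method you choose, it is worth confirming which identity the AWS CLI resolves before creating resources, since that identity will own them. The following is a small sketch; the helper name `current_identity_arn` is hypothetical, while `aws sts get-caller-identity` is a standard AWS CLI command.

```bash
# Print the ARN of the identity that the AWS CLI currently resolves.
# `current_identity_arn` is a hypothetical helper name used for illustration.
current_identity_arn() {
  aws sts get-caller-identity --query Arn --output text
}
```

Calling `current_identity_arn` should print the ARN of the dedicated IAM user if the exported access keys are picked up. If it prints a different identity, review your environment variables and AWS CLI profile before continuing.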

Create an S3 bucket for Terraform state management

Before setting up Terraform, you need to create an S3 bucket that will store the state file. This is important for collaboration and to prevent issues like state file corruption.

To start, set the region as an environment variable upfront to avoid repeating it in each command:

export AWS_REGION=<your-region>

Replace <your-region> with your chosen AWS region (for example, eu-central-1).

Now, follow these steps to create the S3 bucket with versioning enabled:

- Open your terminal and ensure the AWS CLI is installed and configured.

- Create an S3 bucket for storing your Terraform state using aws/common/procedure/s3-bucket/s3-bucket-creation.sh. Make sure to use a unique bucket name and set the AWS_REGION environment variable beforehand.

- Enable versioning on the S3 bucket to track changes and protect the state file from accidental deletions or overwrites using aws/common/procedure/s3-bucket/s3-bucket-versioning.sh.

- Secure the bucket by blocking public access using aws/common/procedure/s3-bucket/s3-bucket-private.sh.

- Verify versioning is enabled on the bucket using aws/common/procedure/s3-bucket/s3-bucket-verify.sh.

This S3 bucket will now securely store your Terraform state files with versioning enabled.
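The four bucket steps above can be sketched with the AWS CLI as follows. This is an illustration under assumptions, not the content of the repository scripts, which may differ; the function name `create_state_bucket` is hypothetical. One detail worth knowing is that `us-east-1` must not be passed as a `LocationConstraint`, so the sketch only adds that argument for other regions.

```bash
# Hypothetical sketch of the bucket steps; the scripts in the repository may differ.
create_state_bucket() {
  local bucket="$1" region="$2"
  local -a create_args=(--bucket "$bucket" --region "$region")

  # us-east-1 is the default location and is rejected as a LocationConstraint.
  if [ "$region" != "us-east-1" ]; then
    create_args+=(--create-bucket-configuration "LocationConstraint=$region")
  fi

  # Create the bucket, enable versioning, block public access, then verify.
  aws s3api create-bucket "${create_args[@]}" &&
  aws s3api put-bucket-versioning --bucket "$bucket" \
    --versioning-configuration Status=Enabled &&
  aws s3api put-public-access-block --bucket "$bucket" \
    --public-access-block-configuration \
    "BlockPublicAcls=true,IgnorePublicAcls=true,BlockPublicPolicy=true,RestrictPublicBuckets=true" &&
  aws s3api get-bucket-versioning --bucket "$bucket" --query Status --output text
}
```

With `S3_TF_BUCKET_NAME` and `AWS_REGION` exported, the function would be called as `create_state_bucket "$S3_TF_BUCKET_NAME" "$AWS_REGION"`. The last command prints the versioning status of the bucket.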

Initialize Terraform

Once your authentication is set up, you can initialize your Terraform project. The previous steps configured a dedicated S3 Bucket (S3_TF_BUCKET_NAME) to store your state, and the following creates a bucket key that will be used by your configuration.

Configure the backend and download the necessary provider plugins by running terraform init against the S3 bucket.

Terraform will connect to the S3 bucket to manage the state file, ensuring remote and persistent storage.
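As an illustration of the initialization step, the sketch below passes the backend settings to `terraform init` on the command line. It assumes the Terraform configuration declares an S3 backend whose bucket, key, and region are left to be supplied at init time; the function name `init_backend` and the state key in the usage example are hypothetical and not taken from the repository.

```bash
# Hypothetical sketch: initialize Terraform with an S3 backend.
# Assumes the configuration contains a partially configured `backend "s3"` block.
init_backend() {
  local bucket="$1" key="$2" region="$3"
  terraform init \
    -backend-config="bucket=$bucket" \
    -backend-config="key=$key" \
    -backend-config="region=$region"
}
```

For example, `init_backend "$S3_TF_BUCKET_NAME" "camunda-terraform/terraform.tfstate" "$AWS_REGION"` would store the state under that example key in the bucket created earlier. Use a distinct key per environment so that state files do not overwrite each other.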

EKS cluster module setup

This module establishes the foundational configuration for AWS access and Terraform.

We will utilize Terraform modules, which allow us to abstract resources into reusable components, streamlining our infrastructure management and following Terraform best practices.

The reference architecture comes with an example module implementation of the EKS cluster and offers a robust starting point for deploying an EKS cluster. It is highly recommended to review this module prior to implementation to understand its structure and capabilities.

The module is sourced locally, so any adjustment you make to the EKS module within your cloned repository directly affects the setup.

Set up the EKS cluster module

- The cluster.tf file in your chosen reference contains a basic setup referencing a local Terraform module with the cluster basics. You can adjust this file within your cloned setup to fit your needs.

  - Standard: aws/kubernetes/eks-single-region/cluster.tf

  - IRSA: aws/kubernetes/eks-single-region-irsa/cluster.tf

- Configure user access to the cluster. By default, the user who creates the Amazon EKS cluster has administrative access.

Grant cluster access to other users

If you want to grant access to other users, you can configure this by using the access_entries input.

Amazon EKS access management is divided into two distinct layers:

- The first layer involves AWS IAM permissions, which allow basic Amazon EKS functionalities such as interacting with the Amazon EKS UI and generating EKS access through the AWS CLI. The module handles this part for you by creating the necessary IAM roles and policies.

- The second layer controls cluster access within Kubernetes, defining the user's permissions inside the cluster (for example, policy association). This can be configured directly through the module's access_entries input.

To manage user access, use the access_entries configuration:

access_entries = {
  example = {
    kubernetes_groups = []
    principal_arn     = "<arn>"

    policy_associations = {
      example = {
        policy_arn = "arn:aws:eks::aws:cluster-access-policy/AmazonEKSViewPolicy"
        access_scope = {
          namespaces = ["default"]
          type       = "namespace"
        }
      }
    }
  }
}

In this configuration:

- Replace principal_arn with the ARN of the IAM user or role.

- Use policy_associations to define policies for fine-grained access control.

For a full list of available policies, refer to the AWS EKS Access Policies documentation.

- Customize the cluster setup. The module offers various input options that allow you to further customize the cluster configuration. For a comprehensive list of available options and detailed usage instructions, refer to the EKS module documentation.

PostgreSQL module setup

If you don't want to use this module, you can skip this section. However, you may need to adjust the remaining instructions to remove references to this module.

If you choose not to use this module, you must either provide a managed PostgreSQL service or use the internal deployment by the Camunda Helm chart in Kubernetes.

Additionally, you must delete the db.tf file within your chosen reference as it will otherwise create the resources.

We separated the cluster and PostgreSQL modules to offer you more customization options.

Set up the Aurora PostgreSQL module

- The db.tf file in your chosen reference contains a basic Aurora PostgreSQL setup referencing a local Terraform module. You can adjust this file within your cloned setup to fit your needs.

  - Standard: aws/kubernetes/eks-single-region/db.tf

  - IRSA: aws/kubernetes/eks-single-region-irsa/db.tf

In addition to using standard username and password authentication, you can opt to use IRSA (IAM Roles for Service Accounts) for secure, role-based access to your Aurora database. This method allows your EKS workloads to assume IAM roles without needing to manage AWS credentials directly.

Note: Using IRSA is optional. If preferred, you can continue using traditional password-based authentication for database access.

If you choose to use IRSA, you’ll need to take note of the IAM role created for Aurora and the AWS Account ID, as these will be used later to annotate the Kubernetes service account.

Aurora IRSA role and policy

The Aurora module uses outputs from the EKS cluster module to configure the IRSA role and policy. The IAM role trust policy and access policy for Aurora are defined in aws/kubernetes/eks-single-region-irsa/db.tf.

Once the IRSA configuration is complete, record the IAM role name (from the iam_aurora_role_name configuration). It is required to annotate the Kubernetes service account in the next step.

- Customize the Aurora cluster setup through various input options. Refer to the Aurora module documentation for more details on other customization options.

OpenSearch module setup

If you don't want to use this module, you can skip this section. However, you may need to adjust the remaining instructions to remove references to this module.

If you choose not to use this module, you'll need to either provide a managed Elasticsearch or OpenSearch service or use the internal deployment by the Camunda Helm chart in Kubernetes.

Additionally, you must delete the opensearch.tf file within your chosen reference as it will otherwise create the resources.

The OpenSearch module creates an OpenSearch domain intended for Camunda platform. OpenSearch is a powerful alternative to Elasticsearch. For more information on using OpenSearch with Camunda, refer to the Camunda documentation.

Using Amazon OpenSearch Service requires setting up a new Camunda installation. Migration from previous Camunda versions using Elasticsearch environments is currently not supported. Switching between Elasticsearch and OpenSearch, in either direction, is also not supported.

Set up the OpenSearch domain module

- The opensearch.tf file in your chosen reference contains a basic AWS OpenSearch setup referencing a local Terraform module. You can adjust this file within your cloned setup to fit your needs.

  - Standard: aws/kubernetes/eks-single-region/opensearch.tf

  - IRSA: aws/kubernetes/eks-single-region-irsa/opensearch.tf

Network based security: The standard deployment for OpenSearch relies on the first layer of security, which is the network. While this setup allows easy access, it may expose sensitive data. To enhance security, consider implementing IAM Roles for Service Accounts (IRSA) to restrict access to the OpenSearch cluster, providing a more secure environment. For more information, see the Amazon OpenSearch Service Fine-Grained Access Control documentation.

In addition to standard authentication, which uses anonymous users and relies on the network for access control, you can also use IRSA (IAM Roles for Service Accounts) to securely connect to OpenSearch. IRSA enables your Kubernetes workloads to assume IAM roles without managing AWS credentials directly.

Note: Using IRSA is optional. If you prefer, you can continue using password-based access to your OpenSearch domain.

If you choose to use IRSA, you’ll need to take note of the IAM role name created for OpenSearch and the AWS Account ID, as these will be required later to annotate the Kubernetes service account.

OpenSearch IRSA role and policy

To configure IRSA for OpenSearch, the OpenSearch module uses outputs from the EKS cluster module to define the necessary IAM role and policies.

The IAM role trust policy and access policy for OpenSearch are defined in aws/kubernetes/eks-single-region-irsa/opensearch.tf. This configuration deploys an OpenSearch domain with advanced security enabled.

Once the IRSA configuration is complete, record the IAM role name (from the iam_opensearch_role_name configuration). It is required to annotate the Kubernetes service account in the next step.

As the OpenSearch domain has advanced security and fine-grained access control enabled, we will later use your provided master username (advanced_security_master_user_name) and password (advanced_security_master_user_password) to perform the initial setup of the security component, allowing the created IRSA role to access the domain.

- Customize the cluster setup using various input options. For a full list of available parameters, see the OpenSearch module documentation.

The instance type m7i.large.search in the above example is a suggestion, and can be changed depending on your needs.

Define outputs

Terraform allows you to define outputs, which make it easier to retrieve important values generated during execution, such as database endpoints and other necessary configurations for Helm setup.

Each module definition set up in the reference contains an output definition at the end of the file. You can adjust them to your needs.

Outputs allow you to easily reference the cert-manager ARN, external-dns ARN, and the endpoints for both PostgreSQL and OpenSearch in subsequent steps or scripts, streamlining your deployment process.
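To illustrate how an output can feed later steps, the sketch below reads a single Terraform output and exports it as an environment variable. The helper name `export_tf_output` and the output name `postgres_endpoint` in the usage example are hypothetical; check the output definitions in your chosen reference for the actual names. `terraform output -raw` is a standard Terraform command that prints a string output without quotes.

```bash
# Export the value of a Terraform output as an environment variable.
# `export_tf_output` is a hypothetical helper name used for illustration.
export_tf_output() {
  local var_name="$1" output_name="$2" value
  # Fail early if the output does not exist or Terraform is not initialized.
  value="$(terraform output -raw "$output_name")" || return 1
  export "$var_name=$value"
}
```

For example, `export_tf_output DB_HOST postgres_endpoint` would make the endpoint available as `$DB_HOST` in the current shell, assuming an output with that name is defined.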

Execution

We strongly recommend managing sensitive information, such as the OpenSearch and Aurora usernames and passwords, using a secure secrets management solution like HashiCorp Vault. For details on how to inject secrets directly into Terraform via Vault, see the Terraform Vault Secrets Injection Guide.

- Open a terminal in the chosen reference folder where config.tf and other .tf files are.

- Perform a final initialization for anything changed throughout the guide using aws/common/procedure/s3-bucket/s3-bucket-tf-init.sh.

- Plan the configuration files:

terraform plan -out cluster.plan # describe what will be created

- After reviewing the plan, you can confirm and apply the changes.

terraform apply cluster.plan # apply the creation

Terraform will now create the Amazon EKS cluster with all the necessary configurations. The completion of this process may require approximately 20-30 minutes for each component.

2. Preparation for Camunda 8 installation

Access the created EKS cluster

You can gain access to the Amazon EKS cluster via the AWS CLI using the following command:

export CLUSTER_NAME="$(terraform console <<<local.eks_cluster_name | jq -r)"

aws eks --region "$AWS_REGION" update-kubeconfig --name "$CLUSTER_NAME" --alias "$CLUSTER_NAME"

After updating the kubeconfig, you can verify your connection to the cluster with kubectl:

kubectl get nodes

Create a namespace for Camunda:

export CAMUNDA_NAMESPACE="camunda"

kubectl create namespace "$CAMUNDA_NAMESPACE"

In the remainder of the guide, we reference the CAMUNDA_NAMESPACE variable as the namespace to create some required resources in the Kubernetes cluster, such as secrets or one-time setup jobs.
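If you rerun parts of this guide, `kubectl create namespace` fails when the namespace already exists. A small sketch of a guard that only creates the namespace when it is missing is shown below; the helper name `ensure_namespace` is hypothetical.

```bash
# Create the namespace only if it does not exist yet.
# `ensure_namespace` is a hypothetical helper name used for illustration.
ensure_namespace() {
  local namespace="$1"
  kubectl get namespace "$namespace" >/dev/null 2>&1 ||
    kubectl create namespace "$namespace"
}
```

It would be called as `ensure_namespace "$CAMUNDA_NAMESPACE"` in place of the plain create command.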

Export values for the Helm chart

After configuring and deploying your infrastructure with Terraform, follow these instructions to export key values for use in Helm charts to deploy Camunda 8 on Kubernetes.

The following commands will export the required outputs as environment variables. You may need to omit some if you have chosen not to use certain modules. These values will be necessary for deploying Camunda 8 with Helm charts:


With the IRSA variant, you do not need to export the PostgreSQL or OpenSearch passwords to authenticate and authorize access to PostgreSQL and OpenSearch, because IRSA handles the authentication.

However, you will still need to export the relevant usernames and other settings to Helm.

Ensure that you use the actual values you passed to the Terraform module during the setup of PostgreSQL and OpenSearch.

Configure the database and associated access

Now that you have a database, you need to create a dedicated database for each Camunda component and an associated user with the appropriate access. Follow these steps to create the database users and configure access.

You can access the created database in two ways:

- Bastion host: Set up a bastion host within the same network to securely access the database.

- Pod within the EKS cluster: Deploy a pod in your EKS cluster equipped with the necessary tools to connect to the database.

The choice depends on your infrastructure setup and security preferences. In this guide, we'll use a pod within the EKS cluster to configure the database.

- In your terminal, set the necessary environment variables that will be substituted in the setup manifest using aws/kubernetes/eks-single-region/procedure/vars-create-db.sh.

A Kubernetes job will connect to the database and create the necessary users with the required privileges. The script installs the necessary dependencies and runs SQL commands to create the IRSA user and assign it the correct roles and privileges.

- Create a secret that references the environment variables:

  - Standard: aws/kubernetes/eks-single-region/procedure/create-setup-db-secret.sh

  - IRSA: aws/kubernetes/eks-single-region-irsa/procedure/create-setup-db-secret.sh

This command creates a secret named setup-db-secret and dynamically populates it with the values from your environment variables.

After running the above command, you can verify that the secret was created successfully by using:

kubectl get secret setup-db-secret -o yaml --namespace "$CAMUNDA_NAMESPACE"

This should display the secret with the base64 encoded values.

- Save the manifest for your variant to a file, for example, setup-postgres-create-db.yml:

  - Standard: aws/kubernetes/eks-single-region/setup-postgres-create-db.yml

  - IRSA: aws/kubernetes/eks-single-region-irsa/setup-postgres-create-db.yml

- Apply the manifest:

kubectl apply -f setup-postgres-create-db.yml --namespace "$CAMUNDA_NAMESPACE"

Once the secret is created, the Job manifest from the previous step can consume this secret to securely access the database credentials.

- Once the job is created, monitor its progress using:

kubectl get job/create-setup-user-db --namespace "$CAMUNDA_NAMESPACE" --watch

Once the job shows as Completed, the users and databases will have been successfully created.

- View the logs of the job to confirm that the users were created and privileges were granted successfully:

kubectl logs job/create-setup-user-db --namespace "$CAMUNDA_NAMESPACE"

- Clean up the resources:

kubectl delete job create-setup-user-db --namespace "$CAMUNDA_NAMESPACE"

kubectl delete secret setup-db-secret --namespace "$CAMUNDA_NAMESPACE"

Running these commands cleans up both the job and the secret, ensuring that no unnecessary resources remain in the cluster.
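As an alternative to watching the job manually before cleaning up, the waiting and log steps above can be combined in a script. The following is a sketch under assumptions; the helper name `wait_for_job` and the default timeout are hypothetical choices. `kubectl wait --for=condition=complete` is a standard kubectl command that blocks until the job reports completion or the timeout expires.

```bash
# Wait for a Kubernetes job to complete; print its logs if it does not.
# `wait_for_job` and the default timeout of 300s are illustrative assumptions.
wait_for_job() {
  local job="$1" namespace="$2" timeout="${3:-300s}"
  if kubectl wait --for=condition=complete "job/$job" \
      --namespace "$namespace" --timeout="$timeout"; then
    return 0
  fi
  # The job did not complete in time: show its logs to help diagnose why.
  kubectl logs "job/$job" --namespace "$namespace" >&2
  return 1
}
```

For the database setup, this would be called as `wait_for_job create-setup-user-db "$CAMUNDA_NAMESPACE"` after applying the manifest and before deleting the job and secret.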

Configure OpenSearch fine-grained access control

As you now have an OpenSearch domain, you need to configure the related access for each Camunda component.

You can access the created OpenSearch domain in two ways:

- Bastion host: Set up a bastion host within the same network to securely access the OpenSearch domain.

- Pod within the EKS cluster: Alternatively, deploy a pod in your EKS cluster equipped with the necessary tools to connect to the OpenSearch domain.

The choice depends on your infrastructure setup and security preferences. In this tutorial, we'll use a pod within the EKS cluster to configure the domain.

Standard: The standard installation comes pre-configured, and no additional steps are required.

IRSA:

- In your terminal, set the necessary environment variables that will be substituted in the setup manifest using aws/kubernetes/eks-single-region-irsa/procedure/vars-create-os.sh.

A Kubernetes job will connect to the OpenSearch domain and configure it.

- Create a secret that references the environment variables using aws/kubernetes/eks-single-region-irsa/procedure/create-setup-os-secret.sh.

This command creates a secret named setup-os-secret and dynamically populates it with the values from your environment variables.

After running the above command, you can verify that the secret was created successfully by using:

kubectl get secret setup-os-secret -o yaml --namespace "$CAMUNDA_NAMESPACE"

This should display the secret with the base64 encoded values.

- Save the manifest aws/kubernetes/eks-single-region-irsa/setup-opensearch-fgac.yml to a file, for example, setup-opensearch-fgac.yml.

- Apply the manifest:

kubectl apply -f setup-opensearch-fgac.yml --namespace "$CAMUNDA_NAMESPACE"

Once the secret is created, the Job manifest from the previous step can consume this secret to securely access the OpenSearch domain credentials.

- Once the job is created, monitor its progress using:

kubectl get job/setup-opensearch-fgac --namespace "$CAMUNDA_NAMESPACE" --watch

Once the job shows as Completed, the OpenSearch domain is configured correctly for fine-grained access control.

- View the logs of the job to confirm that the privileges were granted successfully:

kubectl logs job/setup-opensearch-fgac --namespace "$CAMUNDA_NAMESPACE"

- Clean up the resources:

kubectl delete job setup-opensearch-fgac --namespace "$CAMUNDA_NAMESPACE"

kubectl delete secret setup-os-secret --namespace "$CAMUNDA_NAMESPACE"

Running these commands will clean up both the job and the secret, ensuring that no unnecessary resources remain in the cluster.

3. Install Camunda 8 using the Helm chart

Now that you've exported the necessary values, you can proceed with installing Camunda 8 using Helm charts. Follow the guide Camunda 8 on Kubernetes for detailed instructions on deploying the platform to your Kubernetes cluster.