Install Camunda 8 on an EKS cluster

This guide walks through installing the Camunda 8 Helm chart on your existing Amazon EKS cluster. It also covers optional DNS configuration and other optional AWS-managed services, such as OpenSearch and PostgreSQL.

Lastly, you'll verify that the connection to your Self-Managed Camunda 8 environment is working.

Requirements

- A Kubernetes cluster; see the eksctl or Terraform guide.

- Helm

- kubectl to interact with the cluster.

- jq to interact with some variables.

- GNU envsubst to generate manifests.

- (optional) Domain name/hosted zone in Route53. This allows you to expose Camunda 8 and connect via community-supported zbctl or Camunda Modeler.

- A namespace to host the Camunda Platform.

For the tool versions used, check the .tool-versions file in the repository. It contains an up-to-date list of versions that we also use for testing.

Considerations

While this guide is primarily tailored for UNIX systems, it can also be run under Windows by utilizing the Windows Subsystem for Linux.

Multi-tenancy is disabled by default and is not covered further in this guide. If you decide to enable it, you may use the same PostgreSQL instance and add an extra database for multi-tenancy purposes.

Migration: The migration step will be disabled during the installation. For more information, refer to using Amazon OpenSearch Service.

Architecture

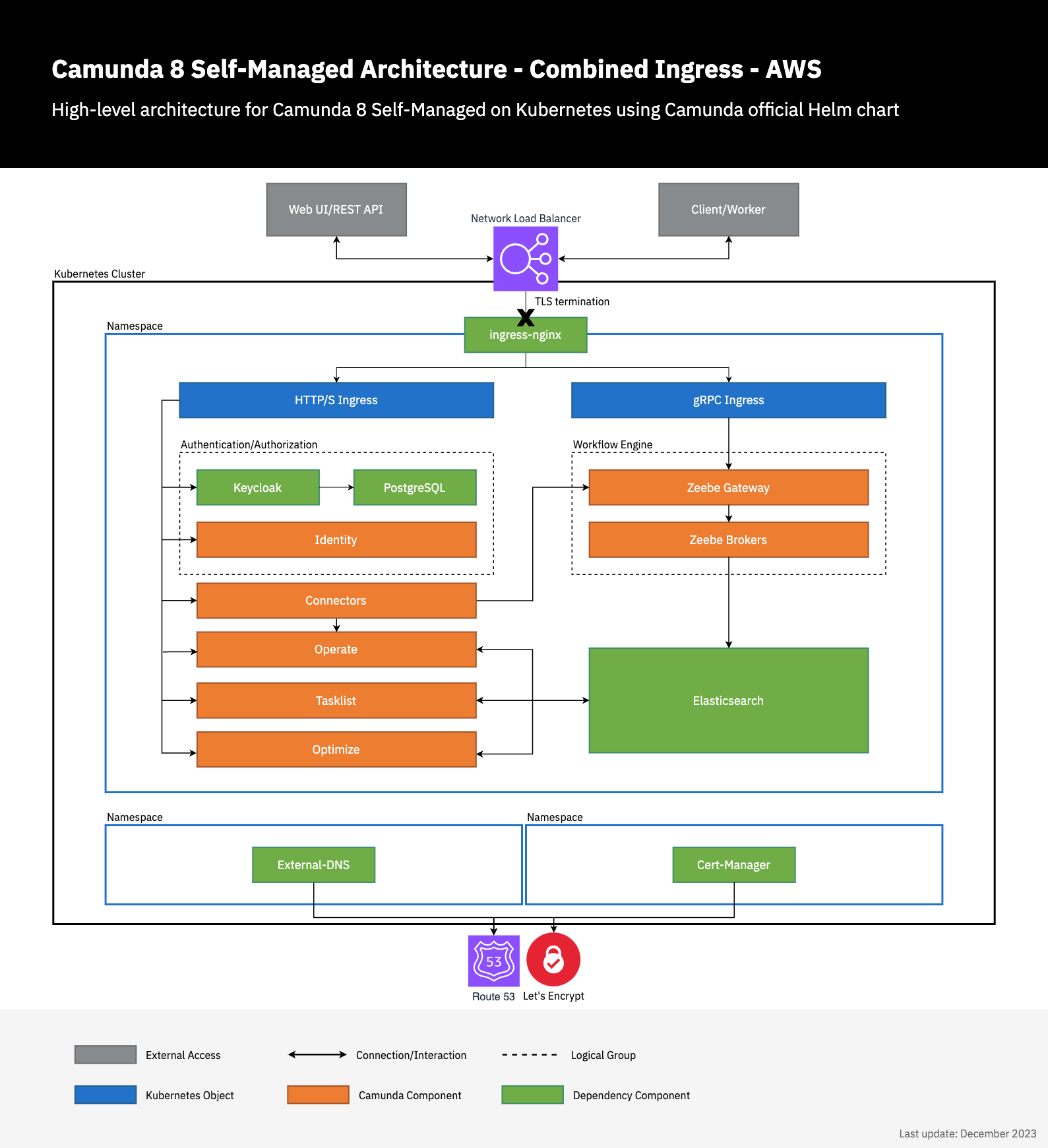

Note that the existing architecture is extended by deploying a Network Load Balancer with TLS termination within the Ingress.

Additionally, two components (external-dns and cert-manager) handle requesting the TLS certificate from Let's Encrypt and configuring Route53 to confirm domain ownership and update the DNS records to expose the Camunda 8 deployment.

Export environment variables

To streamline the execution of the subsequent commands, it is recommended to export multiple environment variables.

Export the AWS region and Helm chart version

The following are the required environment variables with some example values:

loading...

loading...

- CAMUNDA_NAMESPACE is the Kubernetes namespace where Camunda will be installed.

- CAMUNDA_RELEASE_NAME is the name of the Helm release associated with this Camunda installation.

Export database values

When using either standard authentication (network based or username and password) or IRSA authentication, specific environment variables must be set with valid values. Follow the guide for either eksctl or Terraform to set them correctly.

Verify the configuration of your environment variables by running the following loop:

- Standard authentication

- IRSA authentication

loading...

loading...
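The reference loop is not reproduced in this copy of the guide. As a rough sketch of what such a check can look like, the function below reports which variables are unset without printing their values. The variable list is an assumption: it reuses names that appear in the values shown later in this guide (DB_HOST, DB_IDENTITY_NAME, and so on), and `check_vars` is a hypothetical helper. Adjust the list to your authentication method.

```shell
# check_vars (hypothetical helper) reports whether each named variable is set
# and returns non-zero if at least one is unset or empty.
check_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "Error: $name is not set" >&2
      missing=1
    else
      # Only confirm presence; values may contain credentials.
      echo "$name is set"
    fi
  done
  return "$missing"
}

# Assumed list of names; extend it with the variables from the eksctl or Terraform guide.
check_vars DB_HOST DB_IDENTITY_NAME DB_IDENTITY_USERNAME DB_WEBMODELER_NAME DB_WEBMODELER_USERNAME \
  || echo "Some variables are missing; revisit the eksctl or Terraform guide."
```

The check deliberately avoids echoing values so that passwords do not end up in terminal history or logs.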

(Optional) Ingress Setup

If you do not have a domain name, external access to Camunda 8 web endpoints from outside the AWS VPC will not be possible. In this case, you may skip the DNS setup and proceed directly to deploying Camunda 8 via Helm charts.

Alternatively, you can use kubectl port-forward to access the Camunda platform without a domain or Ingress configuration. For more information, see the kubectl port-forward documentation.

Throughout the rest of this installation guide, we will refer to configurations as "With domain" or "Without domain" depending on whether the application is exposed via a domain.

In this section, we provide an optional setup guide for configuring an Ingress with TLS and DNS management, allowing you to access your application through a specified domain. If you haven't set up an Ingress, refer to the Kubernetes Ingress documentation for more details. In Kubernetes, an Ingress is an API object that manages external access to services in a cluster, typically over HTTP, and can also handle TLS encryption for secure connections.

To monitor your Ingress setup using Amazon CloudWatch, you may also find the official AWS guide on monitoring nginx workloads with CloudWatch Container Insights and Prometheus helpful. Additionally, for detailed steps on exposing Kubernetes applications with the nginx ingress controller, refer to the official AWS tutorial.

Export Values

Set the following values for your Ingress configuration:

loading...

Additionally, obtain these values by following the guide for either eksctl or Terraform, as they will be needed in later steps:

- EXTERNAL_DNS_IRSA_ARN

- CERT_MANAGER_IRSA_ARN

- REGION

ingress-nginx

Ingress-nginx is an open-source Kubernetes Ingress controller that provides a way to manage external access to services within a Kubernetes cluster. It acts as a reverse proxy and load balancer, routing incoming traffic to the appropriate services based on rules defined in the Ingress resource.

The following installs ingress-nginx in the ingress-nginx namespace via Helm. For more configuration options, consult the Helm chart.

loading...

external-dns

External-dns is a Kubernetes add-on that automates the management of DNS records for external resources, such as load balancers or Ingress controllers. It monitors the Kubernetes resources and dynamically updates the DNS provider with the appropriate DNS records.

The following installs external-dns in the external-dns namespace via Helm. For more configuration options, consult the Helm chart.

Consider setting domainFilters via --set to restrict access to certain hosted zones.

If you are already running external-dns in a different cluster, ensure each instance has a unique txtOwnerId for the TXT record. Without unique identifiers, the external-dns instances will conflict and inadvertently delete existing DNS records.

In the example below, it's set to external-dns and should be changed if this identifier is already in use. Consult the documentation to learn more about DNS record ownership.

loading...

cert-manager

Cert-manager is an open-source Kubernetes add-on that automates the management and issuance of TLS certificates. It integrates with various certificate authorities (CAs) and provides a straightforward way to obtain, renew, and manage SSL/TLS certificates for your Kubernetes applications.

To simplify the installation process, it is recommended to install the cert-manager CustomResourceDefinition resources before installing the chart. This separate step allows for easy uninstallation and reinstallation of cert-manager without deleting any custom resources that have been installed.

loading...

The following installs cert-manager in the cert-manager namespace via Helm. For more configuration options, consult the Helm chart. The supplied settings also configure cert-manager to ease the certificate creation by setting a default issuer, which allows you to add a single annotation on an Ingress to request the relevant certificates.

loading...

Create a ClusterIssuer via kubectl to enable cert-manager to request certificates from Let's Encrypt:

loading...

Deploy Camunda 8 via Helm charts

For more configuration options, refer to the Helm chart documentation. Additionally, explore our existing resources on the Camunda 8 Helm chart and guides.

Depending on your installation path, you may use different settings. For easy and reproducible installations, we will use YAML files to configure the chart.

1. Create the values.yml file

Start by creating a values.yml file to store the configuration for your environment. This file will contain key-value pairs that will be substituted using envsubst. You can find a reference example of this file here:

- Standard with domain

- Standard without domain

- IRSA with domain

- IRSA without domain

The following makes use of the combined Ingress setup by deploying a single Ingress for all HTTP components and a separate Ingress for the gRPC endpoint.

The annotation kubernetes.io/tls-acme=true will be interpreted by cert-manager and automatically results in the creation of the required certificate request, easing the setup.

loading...

Publicly exposing the Zeebe Gateway without proper authorization can pose significant security risks. To avoid this, consider disabling the Ingress for the Zeebe Gateway by setting the following values to false in your configuration file:

- zeebeGateway.ingress.grpc.enabled

- zeebeGateway.ingress.rest.enabled

By default, authorization is enabled to ensure secure access to Zeebe. Typically, only internal components need direct access to Zeebe, making it unnecessary to expose the gateway externally.

Reference the credentials in secrets

Before installing the Helm chart, create Kubernetes secrets to store the Keycloak database authentication credentials and the OpenSearch authentication credentials.

To create the secrets, run the following commands:

loading...

loading...


2. Configure your deployment

Enable Enterprise components

Some components are not enabled by default in this deployment. For more information on how to configure and enable these components, refer to configuring Web Modeler, Console, and connectors.

Use internal Elasticsearch instead of the managed OpenSearch

If you do not wish to use a managed OpenSearch service, you can opt to use the internal Elasticsearch deployment. This configuration disables OpenSearch and enables the internal Kubernetes Elasticsearch deployment:

Show configuration changes to disable external OpenSearch usage

    global:
      elasticsearch:
        enabled: true
      opensearch:
        enabled: false

    elasticsearch:
      enabled: true

Use internal PostgreSQL instead of the managed Aurora

If you prefer not to use an external PostgreSQL service, you can switch to the internal PostgreSQL deployment. In this case, you will need to configure the Helm chart as follows and remove certain configurations related to the external database and service account:

Show configuration changes to disable external database usage

    identityKeycloak:
      postgresql:
        enabled: true

      # Remove external database configuration
      # externalDatabase:
      #   ...

      # Remove service account and annotations
      # serviceAccount:
      #   ...

      # Remove extra environment variables for external database driver
      # extraEnvVars:
      #   ...

    postgresql:
      enabled: true

    webModeler:
      # Remove this part
      # restapi:
      #   externalDatabase:
      #     url: jdbc:aws-wrapper:postgresql://${DB_HOST}:5432/${DB_WEBMODELER_NAME}
      #     user: ${DB_WEBMODELER_USERNAME}
      #     existingSecret: webmodeler-postgres-secret
      #     existingSecretPasswordKey: password

    identity:
      # Remove this part
      # externalDatabase:
      #   enabled: true
      #   host: ${DB_HOST}
      #   port: 5432
      #   username: ${DB_IDENTITY_USERNAME}
      #   database: ${DB_IDENTITY_NAME}
      #   existingSecret: identity-postgres-secret
      #   existingSecretPasswordKey: password

Fill your deployment with actual values

Once you've prepared the values.yml file, run the following envsubst command to substitute the environment variables with their actual values:

loading...
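Note that envsubst only sees exported environment variables and silently replaces any unset reference with an empty string, which can produce a values file that looks valid but is missing hosts or usernames. The following is a minimal sketch of a pre-flight check, assuming the file from step 1 is named values.yml and sits in the current directory; `list_template_vars` and `report_unexported` are hypothetical helper names.

```shell
# list_template_vars (hypothetical helper) prints the unique ${VAR} names
# referenced in the given file, one per line.
list_template_vars() {
  grep -o '\${[A-Za-z_][A-Za-z0-9_]*}' "$1" | tr -d '${}' | sort -u
}

# report_unexported (hypothetical helper) prints every referenced variable that
# is missing from the environment or empty, and returns non-zero if any is found.
report_unexported() {
  status=0
  for name in $(list_template_vars "$1"); do
    # printenv only sees exported variables, which matches what envsubst sees.
    if [ -z "$(printenv "$name")" ]; then
      echo "Not exported or empty: $name" >&2
      status=1
    fi
  done
  return "$status"
}

# Only run the check when the file from step 1 exists in the current directory.
if [ -f values.yml ]; then
  report_unexported values.yml && echo "All referenced variables are exported."
fi
```

Variables that appear only in commented-out lines of the file are reported as well, so an occasional false positive is expected.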

Next, store various passwords in a Kubernetes secret, which will be used by the Helm chart. Below is an example of how to set up the required secret. You can use openssl to generate random secrets and store them in environment variables:

loading...
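As an illustration of the openssl approach, the sketch below generates hex-encoded random values and exports them. The variable names EXAMPLE_FIRST_SECRET and EXAMPLE_SECOND_SECRET are placeholders invented for this example, not the names the chart expects, and `gen_secret` is a hypothetical helper; use the names required by your secret definition.

```shell
# gen_secret (hypothetical helper) prints N random bytes as lowercase hex.
# The default of 16 bytes yields 32 hex characters.
gen_secret() {
  bytes="${1:-16}"
  if command -v openssl >/dev/null 2>&1; then
    openssl rand -hex "$bytes"
  else
    # Fallback when openssl is unavailable: read from the kernel's random source.
    od -An -N"$bytes" -tx1 /dev/urandom | tr -d ' \n'
    echo
  fi
}

# Placeholder variable names, for illustration only.
EXAMPLE_FIRST_SECRET="$(gen_secret)"
EXAMPLE_SECOND_SECRET="$(gen_secret)"
export EXAMPLE_FIRST_SECRET EXAMPLE_SECOND_SECRET
```

Hex output avoids characters that would need escaping when the values are later passed on a command line.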

Use these environment variables in the kubectl command to create the secret.

- The smtp-password should be replaced with the appropriate external value (see how it's used by Web Modeler).

loading...

3. Install Camunda 8 using Helm

Now that the generated-values.yml is ready, you can install Camunda 8 using Helm. Run the following command:

loading...

This command:

- Installs (or upgrades) the Camunda platform using the Helm chart.

- Substitutes the appropriate version using the $CAMUNDA_HELM_CHART_VERSION environment variable.

- Applies the configuration from generated-values.yml.

This guide uses helm upgrade --install because it performs an install on the initial deployment and an upgrade on subsequent runs. This may simplify future Camunda 8 Helm upgrades or any other component upgrades.

You can track the progress of the installation using the following command:

loading...
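When watching the rollout, it can help to filter the pod list down to what is still starting. The helper below is a sketch under the assumption that the input has the default column layout of `kubectl get pods --no-headers` (NAME, READY, STATUS, ...); `pods_not_ready` is a hypothetical name, not part of kubectl.

```shell
# pods_not_ready (hypothetical helper) reads `kubectl get pods --no-headers`
# output on stdin and prints the names of pods that are not fully ready.
pods_not_ready() {
  awk '{
    split($2, ready, "/")            # READY column, for example "1/1"
    if ($3 == "Completed") next      # finished Jobs are expected and skipped
    if ($3 != "Running" || ready[1] != ready[2]) print $1
  }'
}

# Usage against a live cluster:
#   kubectl get pods --namespace "$CAMUNDA_NAMESPACE" --no-headers | pods_not_ready
```

An empty result means every pod is either Running with all containers ready or Completed.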

Understand how each component interacts with IRSA

Web Modeler

As the Web Modeler REST API uses PostgreSQL, configure the restapi to use IRSA with Amazon Aurora PostgreSQL. Check the Web Modeler database configuration for more details.

Web Modeler already comes fitted with the aws-advanced-jdbc-wrapper within the Docker image.

Keycloak

Only available from v21+: IAM Roles for Service Accounts can only be implemented with Keycloak 21+. This may require you to adjust the version used in the Camunda Helm chart.

From Keycloak versions 21+, the default JDBC driver can be overwritten, allowing use of a custom wrapper like the aws-advanced-jdbc-wrapper to utilize the features of IRSA. This is a wrapper around the default JDBC driver, but takes care of signing the requests.

The official Keycloak documentation also provides detailed instructions for utilizing Amazon Aurora PostgreSQL.

A custom Keycloak container image containing necessary configurations is accessible on Docker Hub at camunda/keycloak. This image, built upon the base image bitnami/keycloak, incorporates the required wrapper for seamless integration.

Container image sources

The sources of the Camunda Keycloak images can be found on GitHub. In this repository, the aws-advanced-jdbc-wrapper is assembled in the Dockerfile.

Maintenance of these images is based on the upstream Bitnami Keycloak images, ensuring they are always up-to-date with the latest Keycloak releases. The lifecycle details for Keycloak can be found on endoflife.date.

Keycloak image configuration

Bitnami Keycloak container image configuration is available at hub.docker.com/bitnami/keycloak.

Identity

Identity uses PostgreSQL, and identity is configured to use IRSA with Amazon Aurora PostgreSQL. Check the Identity database configuration for more details. Identity includes the aws-advanced-jdbc-wrapper within the Docker image.

Amazon OpenSearch Service

Internal database configuration

The default setup is sufficient for Amazon OpenSearch Service clusters without fine-grained access control.

Fine-grained access control adds another layer of security to OpenSearch, requiring you to add a mapping between the IAM role and the internal OpenSearch role. Visit the AWS documentation on fine-grained access control.

There are different ways to configure the mapping within Amazon OpenSearch Service:

- Via a Terraform module in case your OpenSearch instance is exposed.

- Via the OpenSearch dashboard.

- Via the REST API. To authorize the IAM role in OpenSearch for access, follow these steps:

Use the following curl command to update the OpenSearch internal database and authorize the IAM role for access. Replace placeholders with your specific values:

aws/kubernetes/eks-single-region-irsa/setup-opensearch-fgac.yml

loading...

- Replace OPENSEARCH_MASTER_USERNAME and OPENSEARCH_MASTER_PASSWORD with your OpenSearch domain admin credentials.

- Replace OPENSEARCH_HOST with your OpenSearch endpoint URL.

- Replace OPENSEARCH_ROLE_ARN with the IAM role name created by Terraform, which is output by the opensearch_role module.

Security of basic auth usage: This example uses basic authentication (username and password), which may not be the best practice for all scenarios, especially if fine-grained access control is enabled. The endpoint used in this example is not exposed by default, so consult your OpenSearch documentation for specifics on enabling and securing this endpoint.

Ensure that the iam_role_arn of the previously created opensearch_role is assigned to an internal role within Amazon OpenSearch Service. For example, all_access on the Amazon OpenSearch Service side is a good candidate, or if required, extra roles can be created with more restrictive access.
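The referenced manifest is not reproduced in this copy of the guide. As a hedged sketch of the REST approach, the helper below builds a JSON Patch body that sets the backend roles of a role mapping. It assumes the OpenSearch security plugin's role-mapping endpoint (`_plugins/_security/api/rolesmapping/<role>`) is reachable from where you run it; `role_mapping_patch` is a hypothetical helper name, and the commented curl call is illustrative rather than the command shipped with this guide.

```shell
# role_mapping_patch (hypothetical helper) prints a JSON Patch document that
# sets the backend_roles of a role mapping to the given IAM role ARN.
# Caution: this replaces any backend roles already mapped to that role.
role_mapping_patch() {
  printf '[{"op":"add","path":"/backend_roles","value":["%s"]}]\n' "$1"
}

# Usage (not executed here; requires network access to the OpenSearch domain):
#   curl --silent --show-error --fail \
#     --user "$OPENSEARCH_MASTER_USERNAME:$OPENSEARCH_MASTER_PASSWORD" \
#     --request PATCH \
#     --header 'Content-Type: application/json' \
#     --data "$(role_mapping_patch "$OPENSEARCH_ROLE_ARN")" \
#     "https://$OPENSEARCH_HOST/_plugins/_security/api/rolesmapping/all_access"
```

Because the patch overwrites the existing list, check the current mapping first if other IAM roles are already assigned to all_access.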

Verify connectivity to Camunda 8

First, we need an OAuth client to be able to connect to the Camunda 8 cluster.

Generate an M2M token using Identity

Generate an M2M token by following the steps outlined in the Identity getting started guide, along with the incorporating applications documentation.

Below is a summary of the necessary instructions:

- With domain

- Without domain

- Open Identity in your browser at https://${DOMAIN_NAME}/identity. You will be redirected to Keycloak and prompted to log in with a username and password.

- Use demo as both the username and password.

- Select Add application and select M2M as the type. Assign a name like "test."

- Select the newly created application. Then, select Access to APIs > Assign permissions, and select the Core API with "read" and "write" permission.

- Retrieve the client-id and client-secret values from the application details.

export ZEEBE_CLIENT_ID='client-id' # retrieve the value from the identity page of your created m2m application

export ZEEBE_CLIENT_SECRET='client-secret' # retrieve the value from the identity page of your created m2m application

Identity and Keycloak must be port-forwarded to be able to connect to the cluster.

kubectl port-forward "services/$CAMUNDA_RELEASE_NAME-identity" 8080:80 --namespace "$CAMUNDA_NAMESPACE"

kubectl port-forward "services/$CAMUNDA_RELEASE_NAME-keycloak" 18080:80 --namespace "$CAMUNDA_NAMESPACE"

- Open Identity in your browser at http://localhost:8080. You will be redirected to Keycloak and prompted to log in with a username and password.

- Use demo as both the username and password.

- Select Add application and select M2M as the type. Assign a name like "test."

- Select the newly created application. Then, select Access to APIs > Assign permissions, and select the Core API with "read" and "write" permission.

- Retrieve the client-id and client-secret values from the application details.

export ZEEBE_CLIENT_ID='client-id' # retrieve the value from the identity page of your created m2m application

export ZEEBE_CLIENT_SECRET='client-secret' # retrieve the value from the identity page of your created m2m application

To access the other services and their UIs, port-forward those components as well:

Operate:

> kubectl port-forward "svc/$CAMUNDA_RELEASE_NAME-operate" 8081:80 --namespace "$CAMUNDA_NAMESPACE"

Tasklist:

> kubectl port-forward "svc/$CAMUNDA_RELEASE_NAME-tasklist" 8082:80 --namespace "$CAMUNDA_NAMESPACE"

Optimize:

> kubectl port-forward "svc/$CAMUNDA_RELEASE_NAME-optimize" 8083:80 --namespace "$CAMUNDA_NAMESPACE"

Connectors:

> kubectl port-forward "svc/$CAMUNDA_RELEASE_NAME-connectors" 8086:8080 --namespace "$CAMUNDA_NAMESPACE"

WebModeler:

> kubectl port-forward "svc/$CAMUNDA_RELEASE_NAME-web-modeler-webapp" 8084:80 --namespace "$CAMUNDA_NAMESPACE"

Console:

> kubectl port-forward "svc/$CAMUNDA_RELEASE_NAME-console" 8085:80 --namespace "$CAMUNDA_NAMESPACE"
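To avoid retyping the commands above, the sketch below prints them from a small table so they can be reviewed and then run, each in its own terminal. The service suffixes and port mappings are copied from the commands above; `port_forward_cmds` is a hypothetical helper, and it assumes CAMUNDA_RELEASE_NAME and CAMUNDA_NAMESPACE are already exported.

```shell
# port_forward_cmds (hypothetical helper) prints one kubectl port-forward
# command per component, using the release name and namespace from the environment.
port_forward_cmds() {
  while read -r suffix ports; do
    [ -n "$suffix" ] || continue
    printf 'kubectl port-forward "svc/%s-%s" %s --namespace "%s"\n' \
      "$CAMUNDA_RELEASE_NAME" "$suffix" "$ports" "$CAMUNDA_NAMESPACE"
  done <<'EOF'
operate 8081:80
tasklist 8082:80
optimize 8083:80
web-modeler-webapp 8084:80
console 8085:80
connectors 8086:8080
EOF
}

# Usage:
#   port_forward_cmds
```

Printing instead of executing is intentional: each port-forward blocks its terminal, and some components may not be enabled in your deployment.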


Use the token

- REST API

- Desktop Modeler

For a detailed guide on generating and using a token, consult the relevant documentation on authenticating with the Camunda 8 REST API.

- With domain

- Without domain

Export the following environment variables:

loading...

This requires port-forwarding the Zeebe Gateway to be able to connect to the cluster:

kubectl port-forward "services/$CAMUNDA_RELEASE_NAME-zeebe-gateway" 8080:8080 --namespace "$CAMUNDA_NAMESPACE"

Export the following environment variables:

loading...

Generate a temporary token to access the Camunda 8 REST API, then capture the value of the access_token property and store it as your token. Use the stored token (referred to as TOKEN in this case) to interact with the Camunda 8 REST API and display the cluster topology:

loading...

...and results in the following output:

Example output

loading...
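The commands themselves are not reproduced in this copy of the guide. As a sketch of the flow described above, the helper below extracts the access_token property from a token response, with a commented usage example for a live cluster. TOKEN_URL and REST_ADDRESS are assumed variable names introduced for this example: TOKEN_URL stands for the OAuth token URL of your Keycloak realm, and REST_ADDRESS for the base URL of the Zeebe Gateway REST endpoint (for example http://localhost:8080 when port-forwarding as shown above). `extract_token` is a hypothetical helper.

```shell
# extract_token (hypothetical helper) reads a token response (JSON) on stdin
# and prints the access_token property.
# With --exit-status, jq fails when the property is missing or null.
extract_token() {
  jq --raw-output --exit-status '.access_token'
}

# Usage against a live cluster (TOKEN_URL and REST_ADDRESS are assumptions):
#   TOKEN="$(curl --silent --show-error --fail \
#     --data-urlencode "grant_type=client_credentials" \
#     --data-urlencode "client_id=$ZEEBE_CLIENT_ID" \
#     --data-urlencode "client_secret=$ZEEBE_CLIENT_SECRET" \
#     "$TOKEN_URL" | extract_token)"
#   curl --silent --show-error --fail \
#     --header "Authorization: Bearer $TOKEN" "$REST_ADDRESS/v2/topology"
```

Failing early on a missing token makes authentication problems visible before the topology request returns a less obvious error.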

Follow our existing Modeler guide on deploying a diagram. Below are the helper values required to be filled in Modeler:

- With domain

- Without domain

The following values are required for the OAuth authentication:

- Cluster endpoint: https://zeebe.$DOMAIN_NAME, replacing $DOMAIN_NAME with your domain

- Client ID: Retrieve the client ID value from the Identity page of your created M2M application

- Client Secret: Retrieve the client secret value from the Identity page of your created M2M application

- OAuth Token URL: https://$DOMAIN_NAME/auth/realms/camunda-platform/protocol/openid-connect/token, replacing $DOMAIN_NAME with your domain

- Audience: zeebe-api, the default for Camunda 8 Self-Managed

This requires port-forwarding the Zeebe Gateway to be able to connect to the cluster:

kubectl port-forward "services/$CAMUNDA_RELEASE_NAME-zeebe-gateway" 26500:26500 --namespace "$CAMUNDA_NAMESPACE"

The following values are required for OAuth authentication:

- Cluster endpoint: http://localhost:26500

- Client ID: Retrieve the client ID value from the Identity page of your created M2M application

- Client Secret: Retrieve the client secret value from the Identity page of your created M2M application

- OAuth Token URL: http://localhost:18080/auth/realms/camunda-platform/protocol/openid-connect/token

- Audience: zeebe-api, the default for Camunda 8 Self-Managed

Test the installation with payment example application

To test your installation with the deployment of a sample application, refer to the installing payment example guide.

Advanced topics

The following are some advanced configuration topics to consider for your cluster:

To get more familiar with our product stack, visit the following topics: