Update 8.7 to 8.8

The following sections explain which adjustments must be made to migrate from Camunda 8.7.x to 8.8.x.

Camunda Exporter and harmonized data model

The following Camunda 8.7 to 8.8 update guide covers changes related to the new harmonized data model, and the newly introduced Camunda Exporter. For additional details about the introduced exporter and related changes, see the 8.8 announcements.

With the Camunda Exporter introduction, Camunda is compatible with Elasticsearch 8.16+ and no longer supports older Elasticsearch versions. See supported environments.

Ensure the exporter/import backlog is small

For data from 8.7 to be imported, the importers must run first and complete before the Camunda Exporter can start.

The importer reads data from existing Zeebe Elasticsearch indices, and when it detects data from newer versions, it marks itself as complete. The Camunda Exporter then starts exporting only when the importer is done.

To keep a backlog from building up, drain the exporter/import backlog as much as possible before the update.

Otherwise, the backlog can grow and cause web application data to become out of date. Zeebe will also not be able to clean up data and may run out of disk space, which results in downtime.

The backlog can be validated via the following Prometheus metrics:

- operate_import_time_seconds_sum
- operate_import_time_seconds_count

For example, the following query shows the average import latency. If the result is within a few seconds (ideally below 10 seconds), it is safe to update.

sum(rate(operate_import_time_seconds_sum{namespace=~"$namespace", partition=~"$partition", pod=~"$pod"}[$__rate_interval]))
/ sum(rate(operate_import_time_seconds_count{namespace=~"$namespace", partition=~"$partition", pod=~"$pod"}[$__rate_interval]))
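The query above divides the growth of the total import time by the growth of the import count, which gives the average time per import over the window. The same arithmetic can be sketched in Python, assuming two samples of each counter taken some time apart. The helper name `average_import_latency` and the sample values are illustrative only, and the sketch does not handle counter resets.

```python
def average_import_latency(sum_start, sum_end, count_start, count_end):
    """Average seconds per import between two samples of the
    operate_import_time_seconds_sum and _count counters."""
    imports = count_end - count_start
    if imports <= 0:
        return None  # no imports in the window, so latency is undefined
    return (sum_end - sum_start) / imports


# Hypothetical samples: 120 imports took 300 seconds in total.
latency = average_import_latency(1000.0, 1300.0, 400, 520)
print(latency)
```

A result of a few seconds corresponds to the "fine to update" range described above.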

Data migration

To enable Tasklist to utilize the new harmonized indices, Camunda 8.8 provides a migration application to enhance existing process models. This migration application runs asynchronously to the other applications until the importers are done, and ensures that all processes created in 8.7 are migrated and enhanced.

The migration application can be found in the camunda/bin/process-migration directory. It runs against the same Elasticsearch or OpenSearch instance as the rest of your installation, either standalone (for example, locally) or together with the rest of the Camunda 8 application.

The migration application expects the following configuration:

camunda:
  migration:
    process:
      # How many processes are migrated at once
      batchSize: 5
      # The timeout/count down after importer has finished before the migration app should stop
      # Default PT1M
      importerFinishedTimeout: PT1M
      # Retry properties, exponential backoff
      retry:
        # How many times do we retry failing operations until we stop the migration process
        maxRetries: 5
        # The minimum retry delay applied to backoff
        # Default PT1S
        minRetryDelay: PT10S
        # The maximum retry delay applied to backoff
        # Default PT1M
        maxRetryDelay: PT1M
  database:
    connect:
      type: elasticsearch
      # URL of ES cluster
      url: <ES CLUSTER CONNECTION>
      # Cluster name of ES cluster
      clusterName: <CLUSTER NAME>
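To get a feel for how the retry settings interact, the following Python sketch computes the delays an exponential backoff could produce for the values shown above. It assumes the delay doubles after each failed attempt and is capped at the maximum delay. The doubling factor and the helper name `backoff_delays` are assumptions for illustration, and the migration application's actual backoff may differ.

```python
from datetime import timedelta


def backoff_delays(min_delay, max_delay, max_retries, factor=2):
    """Return the delay before each retry, growing by `factor`
    (assumed) and never exceeding max_delay."""
    delays = []
    delay = min_delay
    for _ in range(max_retries):
        delays.append(min(delay, max_delay))
        delay = delay * factor
    return delays


# Values from the configuration above: PT10S, PT1M, 5 retries.
delays = backoff_delays(timedelta(seconds=10), timedelta(minutes=1), 5)
print([int(d.total_seconds()) for d in delays])
```

Under these assumptions, the cap is reached after a few attempts, so a larger maxRetries mostly adds retries at the maximum delay.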

Like other components, the migration application exposes Prometheus metrics under the :9600/actuator/prometheus endpoint. You can use these metrics to track the progress of the migration.

Useful metrics are:

- camunda_migration_processes_migrated: Number of migrated processes so far
- camunda_migration_processes_rounds: Number of migration rounds performed so far
- camunda_migration_processes_round_time: Time taken to complete one migration round
- camunda_migration_processes_single_process_time: Time taken to process a single process definition

Standalone Migration (locally)

When running the application separately (locally, for example), the configuration must be made available to the migration application.

For example, the location of the application.yaml can be provided for local execution:

SPRING_CONFIG_ADDITIONALLOCATION=/path/to/application.yaml ./camunda/bin/process-migration

Integration in the standalone Camunda application

When running the standalone Camunda application, the migration application can run inside it. Enable it with the process-migration Spring profile.

Example Spring profile configuration:

spring:
  profiles:
    active: "operate,tasklist,broker,auth,process-migration"

The above configuration must be made in the related application.yaml of the standalone Camunda application.

Helm charts

When using the Camunda 8 Helm charts, the migration application is deployed automatically together with the importers if you enable the update path.

Turn off importers after completion

Importers are only necessary at the start of the migration. Once complete, they can be safely turned off.

To detect whether the importers are done, check their state in the following Elasticsearch/OpenSearch indices:

- tasklist-import-position-8.2.0_
- operate-import-position-8.3.0_

If all entries show the completed property set to true, the importing is done.

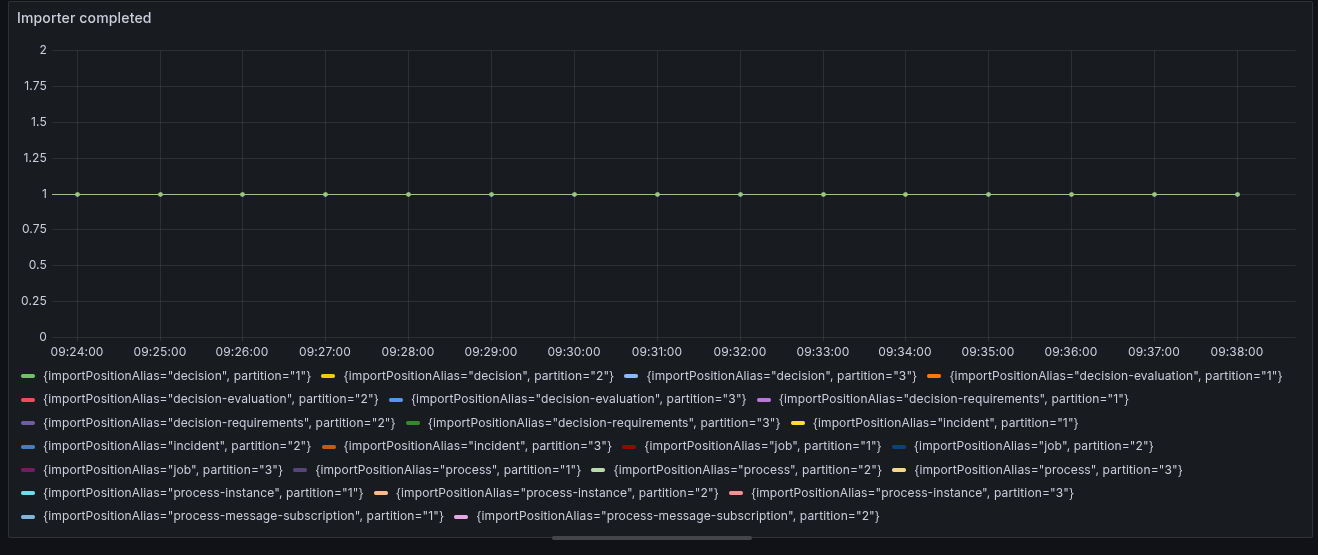
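The completion check described above can be sketched as a small Python function that takes the documents read from an import position index and reports whether every entry is completed. How the documents are fetched is left out. Apart from the completed property, the field names in the sample data are hypothetical.

```python
def importers_done(entries):
    """True only if there is at least one entry and every entry
    has its completed property set to true."""
    return bool(entries) and all(e.get("completed") is True for e in entries)


# Hypothetical documents; only "completed" is taken from the guide.
entries = [
    {"id": "1-process-instance", "completed": True},
    {"id": "1-variable", "completed": False},
]
print(importers_done(entries))
```

An empty result is treated as "not done" here, on the assumption that missing entries should not be read as a finished import.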

Alternatively, metrics can be validated via:

- operate_import_completed
- tasklist_import_completed

These metrics can be used in the following example query:

sum(tasklist_import_completed{namespace="$namespace"}) by (importPositionAlias)

Update any custom prefixes

This step is required if you use an installation with custom prefixes.

The new harmonized index schema introduces one common index prefix. To update to Camunda 8.8, existing indices using custom prefixes must be migrated to a common prefix, so the Camunda Exporter, REST API, and other consumers can be configured correctly.

Prefix migration

Disk usage will massively increase while the migration is ongoing (and will return to normal when complete). Ensure you have at least double the disk space free.

It is recommended that you first back up the database, and test the migration script in a test environment to ensure there are no unexpected issues.

The prefix migration involves downtime, as all data in the secondary storage needs to be migrated to a different prefix, and writes need to be stopped in between.

Complete the following steps to migrate any custom prefixes:

- Shut down your Camunda 8.7 cluster (for example, reduce the replicas to zero). No traffic can be executed against your to-be-migrated ES/OS prefixes.
- Run the prefix-migration script in the camunda/bin directory to execute the prefix migration. The script can be executed locally. Pass the desired prefix using the CAMUNDA_DATABASE_INDEXPREFIX environment variable.
  - Make sure that your configuration in camunda/config has set the previous custom prefixes, and that your configuration is made available to the script (for example, using SPRING_CONFIG_ADDITIONALLOCATION=/path/to/application.yaml ./camunda/bin/prefix-migration).
  - Alternatively, you can set the configuration via environment variables:

    export CAMUNDA_OPERATE_ELASTICSEARCH_INDEX_PREFIX=old-operate-prefix; \
    export CAMUNDA_TASKLIST_ELASTICSEARCH_INDEX_PREFIX=old-tasklist-prefix; \
    export CAMUNDA_DATABASE_INDEXPREFIX=some-new-prefix; \
    ./prefix-migration

With the migration finished, the following configuration changes must be made prior to updating to 8.8.

Without these configuration changes, the update to 8.8 will fail.

Given a new desired prefix of some-new-prefix:

- Set CAMUNDA_OPERATE_ELASTICSEARCH_INDEX_PREFIX to some-new-prefix.
- Set CAMUNDA_TASKLIST_ELASTICSEARCH_INDEX_PREFIX to some-new-prefix.
- Set ZEEBE_BROKER_EXPORTERS_CAMUNDA_ARGS_INDEX_PREFIX (the prefix for the new Camunda exporter) to some-new-prefix.

Single Elasticsearch/OpenSearch instance

Using more than one isolated Elasticsearch/OpenSearch instance for exported Zeebe, Operate, and Tasklist data is no longer supported. If your environment uses multiple Elasticsearch/OpenSearch instances, you must manually migrate the data from each to a single Elasticsearch/OpenSearch cluster before updating to Camunda 8.8. The migration should target Zeebe, Operate, and Tasklist indices, index templates, aliases, and ILM policies.

This step must be performed before all other migration steps.

Orchestration Cluster API

Removed deprecated OpenAPI objects

With the Camunda 8.8 release, deprecated API objects containing number keys have been removed, including the corresponding application/vnd.camunda.api.keys.number+json content type header.

In previous releases, entity keys were transitioned from integer (int64) to string types, and deprecated integer (int64) keys were still supported. As of the 8.8 release, support for integer (int64) keys has been removed.

To update to Camunda 8.8, API objects using integer (int64) keys must be updated to use string keys and the application/json header.

For more information about the key attribute type change, see the 8.7 API key attributes overview.
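For clients that build request bodies by hand, the change from integer to string keys can be sketched as a small Python helper that converts integer key values to strings before sending. The rule used here (any field whose name ends in "Key" holds an entity key) and the sample field names are assumptions for illustration. Check the API specification for the fields your requests actually use.

```python
def stringify_keys(payload):
    """Recursively convert integer values of fields ending in "Key"
    (an assumed naming rule) to strings."""
    if isinstance(payload, dict):
        return {
            name: (
                str(value)
                if name.endswith("Key")
                and isinstance(value, int)
                and not isinstance(value, bool)
                else stringify_keys(value)
            )
            for name, value in payload.items()
        }
    if isinstance(payload, list):
        return [stringify_keys(item) for item in payload]
    return payload


# Hypothetical request body that still uses int64 keys.
body = {"processInstanceKey": 2251799813685249, "limit": 10}
print(stringify_keys(body))
```

The converted body is then sent with the application/json header instead of the removed number-keys content type.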

Streamlined variable OpenAPI objects

The OpenAPI specification streamlines the objects used for variable filtering in search requests. The supported Camunda Java client handles those changes transparently. The affected API endpoints are alpha feature endpoints and thus already marked as subject to change in 8.6 and 8.7.

If you are generating a custom REST client using the specification, consider the following changes:

- The unified VariableValueFilterProperty replaces the ProcessInstanceVariableFilterRequest and UserTaskVariableFilterRequest in the OpenAPI spec.
- For naming consistency, the UserTaskVariableFilter replaces the VariableUserTaskFilterRequest.

Exported records

USER_TASK records

To support user task listeners, some backward-incompatible changes to the exported USER_TASK records were necessary.

assignee no longer provided in CREATED event

Previously, when a user task was activated with a specified assignee, we appended the following events of the USER_TASK value type:

- CREATING with assignee property as provided
- CREATED with assignee property as provided

The ASSIGNING and ASSIGNED events were not appended in this case.

To support the new User Task Listeners feature, the assignee value is no longer filled in the CREATED event.

With 8.8, the following events are now appended:

- CREATING with assignee property as provided
- CREATED with assignee always "" (empty string)
- ASSIGNING with assignee property as provided
- ASSIGNED with assignee property as provided
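A consumer of exported records that used to read the assignee from the CREATED event needs to take it from the ASSIGNED event instead. The following Python sketch derives the current assignee from a sequence of USER_TASK records and works with both the old and the new event order. It assumes simplified records with an intent field and a value object containing assignee, and the helper name `current_assignee` is illustrative.

```python
def current_assignee(records):
    """Return the latest assignee seen in a list of USER_TASK records."""
    assignee = ""
    for record in records:
        value = record["value"].get("assignee", "")
        # 8.8: the assignee arrives with ASSIGNED.
        # 8.7: a non-empty assignee could arrive with CREATED.
        if record["intent"] == "ASSIGNED" or (record["intent"] == "CREATED" and value):
            assignee = value
    return assignee


# Simplified 8.8 sequence for a task created with assignee "alice".
records_88 = [
    {"intent": "CREATING", "value": {"assignee": "alice"}},
    {"intent": "CREATED", "value": {"assignee": ""}},
    {"intent": "ASSIGNING", "value": {"assignee": "alice"}},
    {"intent": "ASSIGNED", "value": {"assignee": "alice"}},
]
print(current_assignee(records_88))
```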

ASSIGNING has become CLAIMING for CLAIM operation

When claiming a user task, we previously appended the following records of the USER_TASK value type:

- CLAIM
- ASSIGNING
- ASSIGNED

A new CLAIMING intent was introduced to distinguish between claiming and regular assigning.

We now append the following records when claiming a user task:

- CLAIM
- CLAIMING
- ASSIGNED

The ASSIGNING event is still appended for assigning a user task.

In that case, we append the following records:

- ASSIGN
- ASSIGNING
- ASSIGNED

Zeebe record types

With the introduction of the Camunda Exporter, the Elasticsearch and OpenSearch Exporter no longer export all record types by default. Therefore, not all zeebe-record indices will be populated.

This only affects records exported with the 8.8 version. During the migration, leftover 8.7 records are exported as normal.

The record types that continue to be exported by default are the following:

- DEPLOYMENT
- PROCESS
- PROCESS_INSTANCE
- VARIABLE
- USER_TASK
- INCIDENT
- JOB

To export other record types, review includeEnabledRecords in the Elasticsearch or OpenSearch exporter configuration.
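If other tools read from the zeebe-record indices, it can help to list which of the record types they depend on are no longer exported by default. A minimal Python sketch, using the default list above. The helper name `types_needing_opt_in` and the sample input are illustrative.

```python
# Record types that are still exported by default in 8.8 (from the list above).
DEFAULT_EXPORTED = {
    "DEPLOYMENT", "PROCESS", "PROCESS_INSTANCE", "VARIABLE",
    "USER_TASK", "INCIDENT", "JOB",
}


def types_needing_opt_in(required_types):
    """Return the required record types that are not exported by default."""
    return sorted(set(required_types) - DEFAULT_EXPORTED)


# Hypothetical consumer that reads JOB, MESSAGE, and TIMER records.
missing = types_needing_opt_in(["JOB", "MESSAGE", "TIMER"])
print(missing)
```

Any type returned here would need to be enabled explicitly in the exporter configuration.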

Connectors

Email connector

Starting with the 8.8 release, angle brackets (< and >) are no longer removed from the messageId.
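If downstream logic compares messageId values produced by 8.8 with values stored by earlier versions, the two forms may need to be normalized first. The following Python sketch strips one pair of surrounding angle brackets. The helper name `strip_angle_brackets` and the sample ID are hypothetical, and the sketch only covers brackets at the very start and end of the value.

```python
def strip_angle_brackets(message_id):
    """Remove one pair of surrounding angle brackets, if present."""
    if message_id.startswith("<") and message_id.endswith(">"):
        return message_id[1:-1]
    return message_id


# Hypothetical IDs: as provided by 8.8, and as stored by an earlier version.
new_style = "<abc123@example.com>"
old_style = "abc123@example.com"
print(strip_angle_brackets(new_style) == old_style)
```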