Amazon EC2

This guide provides a detailed walkthrough for installing the Camunda 8 single JAR on AWS EC2 instances. It focuses on managed services provided by AWS and their cloud offering. Finally, you will verify that the connection to your Self-Managed Camunda 8 environment is functioning correctly.

This guide focuses on setting up the Orchestration Cluster for Camunda 8. Web Modeler and Console are not covered in this manual deployment approach, as these components are not supported on virtual machines. To deploy Web Modeler and Console, explore the available options for Kubernetes-based deployments.

This guide is based on tools and services provided by AWS but is not limited to them. The scripts and concepts included can be adapted for other cloud providers and use cases.

When using a different cloud provider, you are responsible for configuring and maintaining the resulting infrastructure. Support is limited to questions related to this guide—not to the specific tools or services of your chosen cloud provider.

Following this guide will incur costs on your cloud provider account—primarily for EC2 instances and OpenSearch. Visit AWS and their pricing calculator for detailed cost estimates, as pricing varies by region.

You may refer to this example calculation, which can be further optimized for your specific use case.

Architecture

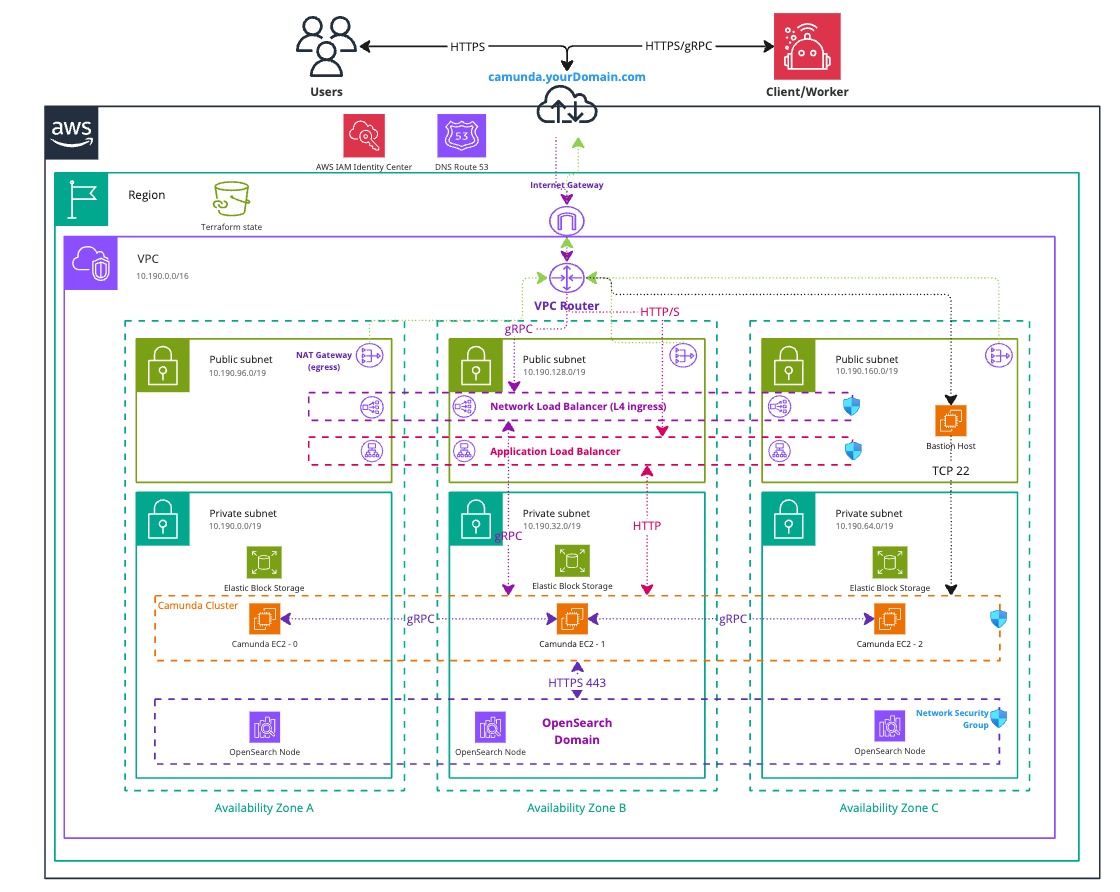

The architecture outlined below describes a standard three-node deployment, distributed across three availability zones within a single AWS region. It includes a managed OpenSearch domain deployed under the same conditions. This approach ensures high availability and redundancy in case of a zone failure.

Infrastructure diagram for a 3-node EC2 architecture.

The setup includes:

- A Virtual Private Cloud (VPC), which is a logically isolated virtual network.

- A Private Subnet, which does not have direct internet access.

- Three EC2 instances running Ubuntu, one in each availability zone, hosting the Camunda 8 components.

- A managed OpenSearch cluster, also distributed across the three zones.

- A Public Subnet, which has internet access via an Internet Gateway.

- (Optional) An Application Load Balancer (ALB) to expose web interfaces such as Operate, Tasklist, Connectors, and the Orchestration Cluster REST API. This uses sticky sessions, as requests are otherwise distributed round-robin across EC2 instances.

- (Optional) A Network Load Balancer (NLB) to expose the gRPC endpoint of the Zeebe Gateway, if external applications need to connect.

- (Optional) A Bastion Host to allow access to private EC2 instances that are not publicly exposed.

- Alternatively, use AWS Client VPN to access the private subnet. This setup requires additional effort and certificates but can be implemented using AWS’s getting started guide.

- A NAT Gateway that allows private EC2 instances to access the internet for downloading and updating software packages. Note that this does not provide inbound access to the instances.

- Security Groups to control network traffic to and from the EC2 instances.

- An Internet Gateway to route traffic between the VPC and the internet.

Both subnet types are distributed across three availability zones in a single AWS region, supporting a high-availability architecture.

You can also run this setup using a single AWS EC2 instance. However, in the event of a zone failure, the entire environment would become unreachable.

Requirements

- An AWS account to provision resources.

- At a high level, permissions are needed for ec2, iam, elasticloadbalancing, kms, logs, and es services.

- For detailed permissions, refer to this example policy.

- Terraform (v1.7 or later)

- A Unix-based operating system with ssh and sftp.

- Windows may be used with Cygwin or Windows WSL, though these configurations have not been tested.

Outcome

The result is a fully functioning Camunda Orchestration Cluster deployed in a high-availability setup using AWS EC2 and a managed OpenSearch domain.

Each EC2 instance includes an additional disk, dedicated to Camunda, to separate application data from the operating system.

1. Configure AWS and initialize Terraform

We do not recommend using the following Terraform-based infrastructure as a module, since we cannot guarantee compatibility.

Instead, we suggest reusing or extending components of the Terraform example to ensure alignment with your environment.

Obtain a copy of the reference architecture

Start by downloading a copy of the reference architecture from the GitHub repository. This content will be used throughout the rest of the guide. The reference architectures are versioned according to Camunda releases (e.g., stable/8.x).

The reference architecture repository allows you to reuse and extend the provided Terraform examples. This flexible implementation avoids the constraints of relying on third-party-maintained Terraform modules.

With the reference architecture in place, you can proceed with the remaining steps in this documentation. Make sure you're in the correct directory before continuing with the instructions.

Terraform prerequisites

To manage Camunda 8 infrastructure on AWS using Terraform, you need to configure Terraform's backend to store the state file remotely in an S3 bucket. This provides secure, persistent state storage for your infrastructure.

Advanced users may choose to configure a different backend. The setup described here is a recommended starting point for new users.

Set up AWS authentication

The AWS Terraform provider is required to provision resources in AWS. Before using the provider, you must authenticate it with your AWS credentials.

Any user who creates AWS resources retains administrative access to them. For better control and security, it is recommended to create a dedicated AWS IAM user specifically for Terraform. This ensures the resources are properly managed and owned by a single identity.

You can customize the region and authentication settings as needed. Terraform supports multiple authentication methods, including:

- For development or testing, you can use the AWS CLI. If your AWS CLI is already configured, Terraform will automatically detect and use those credentials.

To configure the AWS CLI:

aws configure

Enter your AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, region, and output format. These can be retrieved from the AWS Console.

- For production environments, it is recommended to use a dedicated IAM user. Create access keys for that user via the AWS console, and export them as the environment variables AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY.
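Before running Terraform, it can help to confirm that both variables are actually present in the current shell. The following is a minimal sketch and not part of the reference architecture; the function name check_aws_env is hypothetical, and the values in the usage comment are placeholders.

```shell
#!/bin/sh
# Hypothetical helper: report which credential variables are missing.
# Returns 0 when both are set and non-empty, 1 otherwise.
check_aws_env() {
  missing=0
  if [ -z "${AWS_ACCESS_KEY_ID:-}" ]; then
    echo "AWS_ACCESS_KEY_ID is not set" >&2
    missing=1
  fi
  if [ -z "${AWS_SECRET_ACCESS_KEY:-}" ]; then
    echo "AWS_SECRET_ACCESS_KEY is not set" >&2
    missing=1
  fi
  return "$missing"
}

# Usage (placeholders, replace with the keys created for the IAM user):
#   export AWS_ACCESS_KEY_ID=<your-access-key-id>
#   export AWS_SECRET_ACCESS_KEY=<your-secret-access-key>
#   check_aws_env && aws sts get-caller-identity   # shows the identity that will be used
```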

Create an S3 bucket for Terraform state management

Before initializing Terraform, you must create an S3 bucket to store the state file. This is essential for collaborative work and helps prevent issues such as state file corruption.

Begin by setting your preferred AWS region as an environment variable to avoid repeating it in every command:

export AWS_REGION=<your-region>

Replace <your-region> with your chosen AWS region (e.g., eu-central-1).

Next, follow these steps to create an S3 bucket with versioning enabled:

- Open your terminal and ensure that the AWS CLI is installed and properly configured.

- Create an S3 bucket for storing your Terraform state. Be sure to choose a unique bucket name, and ensure that the AWS_REGION environment variable is already set. The reference script is aws/common/procedure/s3-bucket/s3-bucket-creation.sh.

- Enable versioning on the S3 bucket to track changes and protect the state file from accidental deletions or overwrites. The reference script is aws/common/procedure/s3-bucket/s3-bucket-versioning.sh.

- Secure the bucket by blocking public access. The reference script is aws/common/procedure/s3-bucket/s3-bucket-private.sh.

- Verify versioning is enabled on the bucket. The reference script is aws/common/procedure/s3-bucket/s3-bucket-verify.sh.

The S3 bucket is now ready to securely store your Terraform state files, with versioning enabled for added protection.
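The bucket steps can be sketched with standard AWS CLI s3api subcommands as follows. This is an illustration under assumptions, and the reference scripts may differ in detail. The run and setup_state_bucket helpers are hypothetical, and DRY_RUN defaults to printing the commands rather than executing them.

```shell
#!/bin/sh
# Sketch only: with DRY_RUN=1 (the default here) each command is printed, not run.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Create, version, lock down, and verify the state bucket.
# $1 = bucket name, $2 = AWS region
setup_state_bucket() {
  bucket=$1
  region=$2
  if [ "$region" = "us-east-1" ]; then
    # us-east-1 does not accept an explicit LocationConstraint
    run aws s3api create-bucket --bucket "$bucket" --region "$region"
  else
    run aws s3api create-bucket --bucket "$bucket" --region "$region" \
      --create-bucket-configuration "LocationConstraint=$region"
  fi
  run aws s3api put-bucket-versioning --bucket "$bucket" \
    --versioning-configuration Status=Enabled
  run aws s3api put-public-access-block --bucket "$bucket" \
    --public-access-block-configuration \
    "BlockPublicAcls=true,IgnorePublicAcls=true,BlockPublicPolicy=true,RestrictPublicBuckets=true"
  run aws s3api get-bucket-versioning --bucket "$bucket"
}

# Usage:
#   setup_state_bucket "$S3_TF_BUCKET_NAME" "$AWS_REGION"              # preview
#   DRY_RUN=0; setup_state_bucket "$S3_TF_BUCKET_NAME" "$AWS_REGION"   # execute
```

Previewing first makes it easy to check the bucket name and region before anything is created.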

Initialize Terraform

Once authentication is configured, you can initialize your Terraform project. Earlier, you created a dedicated S3 bucket (S3_TF_BUCKET_NAME) for storing the state file. In this step, Terraform will use that bucket along with a specific key to manage your infrastructure state.

Make sure you are in the terraform subfolder (camunda-deployment-references/aws/compute/ec2-single-region/terraform), then initialize the backend and download the required provider plugins.

Terraform will now use the S3 bucket to manage the state file, ensuring remote and persistent storage.
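As an illustration, an S3 backend can be initialized by passing its settings through the -backend-config option of terraform init. The sketch below only prints the command. It assumes the backend block in the reference is declared without these values, and S3_TF_BUCKET_KEY with its default value is a hypothetical name for the state key.

```shell
#!/bin/sh
# Print the terraform init command for an S3 backend (nothing is executed).
# $1 = bucket, $2 = state key (hypothetical), $3 = region
tf_init_command() {
  printf 'terraform init -backend-config="bucket=%s" -backend-config="key=%s" -backend-config="region=%s"\n' \
    "$1" "$2" "$3"
}

# Falls back to placeholder values when the variables are not exported.
tf_init_command "${S3_TF_BUCKET_NAME:-my-tf-state-bucket}" \
  "${S3_TF_BUCKET_KEY:-camunda-terraform/terraform.tfstate}" \
  "${AWS_REGION:-eu-central-1}"
```

Review the printed command, then run it from the Terraform folder once the bucket name and key look right.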

EC2 setup

The ec2.tf file handles the creation of compute instances and, optionally, a bastion host. Within this file, you can configure disk size, instance type, enable or disable the bastion host, and define the Amazon Machine Image (AMI) to override the default behavior of using the latest available image.

The file defines all resources related to the EC2 setup and can be customized as needed in your copied reference architecture. For the complete file, see ec2.tf in your copy of the reference architecture.

Security setup

The security.tf file defines several security groups to manage access and traffic flow for different use cases, including:

- Allowing internal VPC traffic on Camunda ports

- Permitting EC2 instances to access external traffic on ports 80 and 443 to download dependencies (e.g., Java, Camunda)

- Allowing inbound traffic to the Load Balancer on specific ports

- Enabling SSH access for the bastion host

In addition to traffic management, this file also includes:

- A KMS key for encrypting EC2 disks and the OpenSearch domain

- An SSH key pair used to authorize remote SSH connections

The security.tf file in your copied reference shows which resources are created and how they can be customized.

Load balancer setup

The lb.tf file defines the load balancer configuration used to expose Camunda 8 either publicly or within your internal network. You can further restrict access based on your security requirements.

The configuration includes two types of load balancers:

- A Network Load Balancer to expose the gRPC endpoint

- An Application Load Balancer to expose the Camunda WebApps and REST API

The lb.tf file in your copied reference shows the resources defined for both load balancers and how they can be customized.

OpenSearch module setup

If you do not want to use this module, you can skip this section. However, you will need to adjust the remaining steps to remove any references to it.

If you choose not to use this module, you must provide your own Elasticsearch or OpenSearch service.

Additionally, be sure to delete the opensearch.tf file in your reference copy—otherwise, the resources defined in it will still be created.

The OpenSearch module provisions an OpenSearch domain for use with the Camunda platform. OpenSearch is an open-source search and analytics engine that Camunda can use in place of Elasticsearch.

Using Amazon OpenSearch Service requires setting up a new Camunda installation. Migration from earlier Camunda versions using Elasticsearch is not currently supported. Switching between Elasticsearch and OpenSearch in either direction is also unsupported.

Set up the OpenSearch domain module

- The opensearch.tf file in your reference (aws/compute/ec2-single-region/terraform/cluster/opensearch.tf) contains a basic OpenSearch setup using a local Terraform module. You can modify this file within your cloned setup to suit your needs.

Network-based security: The default OpenSearch deployment relies primarily on network-level security. While this simplifies access, it can expose sensitive data within your VPC. To enhance security, consider enabling fine-grained access control.

- Customize the cluster setup using various input options. For a complete list of available parameters, refer to the OpenSearch module documentation.

The instance type m7i.large.search used in the example is only a suggestion. You can change it based on your workload and requirements.

Define outputs

Terraform allows you to define outputs—useful for retrieving important values generated during execution, such as database endpoints and other configuration details.

Each Terraform definition in the reference includes output blocks at the end of the file. You can modify these as needed. The provided defaults are ready to use in automation scripts.

Defining outputs helps you easily reference resources in later steps or scripts, simplifying your deployment workflow.

Execution

We strongly recommend managing sensitive information using a secure secrets management tool such as HashiCorp Vault. For guidance on injecting secrets into Terraform via Vault, refer to the Terraform Vault Secrets Injection Guide.

- Open a terminal in the reference directory containing config.tf and the other .tf files.

- Perform a final initialization to apply any changes made throughout this guide. The reference script is aws/common/procedure/s3-bucket/s3-bucket-tf-init.sh.

- Plan the configuration files:

terraform plan -out cluster.plan # describe what will be created

- After reviewing the plan, you can confirm and apply the changes:

terraform apply cluster.plan # apply the creation

Terraform will now provision the Amazon EC2 resources and the OpenSearch domain with all necessary configurations. This process may take approximately 20–30 minutes to complete.

Connect to remote machines via Bastion host (optional)

Since the EC2 instances are not publicly accessible, you must connect to them through a Bastion host. Alternatively, you can use AWS Client VPN to securely access the private VPC.

This guide does not cover the Client VPN setup, as it requires specific manual configuration and user interaction. To connect through the Bastion host, run the following commands from the Terraform folder:

export BASTION_HOST=$(terraform output -raw bastion_ip)

# retrieves the first IP from the camunda_ips array

export CAMUNDA_IP=$(terraform output -json camunda_ips | jq -r '.[0]')

ssh -J admin@${BASTION_HOST} admin@${CAMUNDA_IP}

2. Deploy Camunda 8

Configure and run the installation procedure

- Navigate to the procedure directory:

cd camunda-deployment-references/aws/compute/ec2-single-region/procedure

The procedure directory contains Bash scripts for installing and configuring Camunda 8.

- Configure script behavior using the following environment variables:

  - CLOUDWATCH_ENABLED: Defaults to false. Set to true to install the CloudWatch agent on each EC2 instance and export Camunda logs and Prometheus metrics to AWS CloudWatch.

- Override default versions in the camunda-install.sh script by modifying these variables:

  - OPENJDK_VERSION: The Temurin Java version to install.
  - CAMUNDA_VERSION: The Camunda 8 version to install.
  - CAMUNDA_CONNECTORS_VERSION: The Camunda 8 Connectors version to install.

Note: These variables must be set inside the camunda-install.sh script itself; they cannot be set as environment variables.

- Run the all-in-one-install.sh script.

This script installs all required dependencies and configures Camunda 8 to run in a highly available setup using a managed OpenSearch instance.

It automatically retrieves all required IP addresses and other details from the Terraform state via Terraform outputs.

During the initial run, you will be prompted to confirm SSH connections to each EC2 instance by typing yes.
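Because CLOUDWATCH_ENABLED is a plain true/false toggle, a small guard can catch typos before the installer starts. This is a sketch under the assumption that only the literal values true and false are meaningful; the cloudwatch_setting helper is hypothetical and not part of the procedure scripts.

```shell
#!/bin/sh
# Validate the CLOUDWATCH_ENABLED toggle before launching the installer.
# An unset variable counts as the documented default (false).
cloudwatch_setting() {
  value=${CLOUDWATCH_ENABLED:-false}
  case $value in
    true|false)
      echo "$value"
      ;;
    *)
      echo "CLOUDWATCH_ENABLED must be true or false, got: $value" >&2
      return 1
      ;;
  esac
}

# Usage, from the procedure directory:
#   CLOUDWATCH_ENABLED=$(cloudwatch_setting) && export CLOUDWATCH_ENABLED && ./all-in-one-install.sh
```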

Connect and use Camunda 8

The Application Load Balancer (ALB) and Network Load Balancer (NLB) endpoints are available via Terraform outputs:

- terraform output alb_endpoint: Use this to access Operate and other Web UIs (such as Tasklist, Optimize, and Connectors). The ALB handles HTTP traffic for these interfaces (e.g., Connectors on port 9090), as well as the Orchestration Cluster REST API.

- terraform output nlb_endpoint: Use this to access the Zeebe Gateway’s gRPC endpoint. The NLB is designed for TCP-based gRPC traffic, while the ALB handles HTTP.

These endpoints use AWS-assigned public hostnames. To use your own domain, create CNAME records pointing to these hostnames or use Route 53 for DNS management and to enable SSL certificates. Note that enabling SSL and custom domains will require additional configuration in the Terraform blueprint, since it listens on HTTP by default.

If you prefer not to expose your environment publicly, you can use the Bastion host (jump host) to access services locally via port forwarding.

For a more secure, enterprise-grade solution, use the AWS Client VPN to access the private subnet within your VPC. This setup requires additional certificates and configuration, detailed in the AWS getting started tutorial.

The following commands can be run from within the Terraform folder to bind remote ports to your local machine via SSH port forwarding:

export BASTION_HOST=$(terraform output -raw bastion_ip)

# retrieves the first IP from the camunda_ips array

export CAMUNDA_IP=$(terraform output -json camunda_ips | jq -r '.[0]')

# 26500 - gRPC; 8080 - WebUI; 9090 - Connectors

ssh -L 26500:${CAMUNDA_IP}:26500 -L 8080:${CAMUNDA_IP}:8080 -L 9090:${CAMUNDA_IP}:9090 admin@${BASTION_HOST}
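If you forward a different set of ports, the repeated -L flags can be generated instead of typed by hand. The helper below is a hypothetical convenience, not part of the reference scripts. It maps each remote port to the same local port number, as in the command above.

```shell
#!/bin/sh
# Build the -L flags that forward each listed port from a private host
# to the same port number on the local machine.
# $1 = private IP of the target instance; remaining arguments = ports
forward_flags() {
  target=$1
  shift
  flags=""
  for port in "$@"; do
    flags="$flags -L ${port}:${target}:${port}"
  done
  # Strip the leading space left by the first iteration.
  echo "${flags# }"
}

# Usage (the substitution is intentionally unquoted so the flags split into words):
#   ssh $(forward_flags "$CAMUNDA_IP" 26500 8080 9090) "admin@${BASTION_HOST}"
```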

Turn off bastion host (optional)

If you used the bastion host for access, it can be turned off when it is no longer needed for direct access to the EC2 instances.

To turn off the bastion host, set the enable_jump_host variable to false in the variables.tf file, and reapply Terraform.

3. Verify connectivity to Camunda 8

Using Terraform, you can obtain the HTTP endpoint of the Application Load Balancer and interact with Camunda through the Orchestration Cluster REST API.

- Navigate to the Terraform folder:

cd camunda-deployment-references/aws/compute/ec2-single-region/terraform

- Retrieve the Application Load Balancer output:

terraform output -raw alb_endpoint

- Use the Orchestration Cluster REST API to communicate with Camunda:

Follow the example in the Orchestration Cluster REST API documentation to authenticate and retrieve the cluster topology.
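A minimal sketch of that check follows. It assumes the topology endpoint is served at /v2/topology and that the load balancer speaks plain HTTP, as the blueprint does by default. The topology_url helper is hypothetical, and the authentication options depend on how your cluster is configured.

```shell
#!/bin/sh
# Turn the alb_endpoint output into a topology URL.
# Assumes plain HTTP when the endpoint carries no scheme.
topology_url() {
  endpoint=${1%/}                  # drop one trailing slash, if present
  case $endpoint in
    http://*|https://*) ;;         # scheme already present, keep as-is
    *) endpoint="http://$endpoint" ;;
  esac
  echo "$endpoint/v2/topology"
}

# Usage, from the Terraform folder:
#   curl -s "$(topology_url "$(terraform output -raw alb_endpoint)")"
# Add the credentials your cluster expects, for example with curl's -u option.
```

A response listing the brokers and their partitions indicates that the load balancer can reach the cluster.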

Manage Camunda 8

Upgrade Camunda 8

Upgrading directly from Camunda 8.6 to 8.7 is not supported and cannot be performed.

To update to a new patch release, follow this recommended approach:

- Remove the lib folder. This ensures outdated dependencies from previous versions are fully removed.

- Overwrite the remaining files with those from the downloaded patch release package.

- Restart Camunda 8.

You can automate this process using the all-in-one-install.sh script, which:

- Detects an existing Camunda 8 installation.

- Deletes the lib folder to clear outdated dependencies.

- Overwrites files with the updated version.

- Regenerates configuration files.

- Restarts the application to apply updates.
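For illustration, the file-level part of a patch upgrade can be sketched as below. This is not the logic of all-in-one-install.sh. The apply_patch_release helper and both directory arguments are hypothetical, and restarting the service is left to the caller.

```shell
#!/bin/sh
# Apply a patch release to an existing installation directory.
# $1 = current installation directory, $2 = unpacked patch release directory
apply_patch_release() {
  install_dir=$1
  release_dir=$2
  if [ ! -d "$install_dir" ] || [ ! -d "$release_dir" ]; then
    echo "both arguments must be existing directories" >&2
    return 1
  fi
  # 1. Remove lib so that no outdated dependency files survive the upgrade.
  rm -rf "$install_dir/lib"
  # 2. Overwrite the remaining files with those from the new release.
  cp -R "$release_dir/." "$install_dir/"
  # 3. Restart Camunda 8 afterwards (not done here; service management differs per setup).
}
```

Files that exist only in the installation directory are left in place, while files shipped in the release are overwritten, so back up any customized configuration first.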

Monitoring

Camunda exposes metrics in Prometheus format by default. For details on scraping Camunda 8 metrics, see metrics.

In AWS environments, you can leverage CloudWatch for log collection and for gathering Prometheus metrics. While Camunda natively integrates with Prometheus and Grafana, using CloudWatch for metrics visualization requires additional configuration.
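To take a quick look at the raw metrics before wiring up a scraper, the Prometheus text output can be filtered down to its sample lines. In this sketch the management port 9600 and the /actuator/prometheus path are assumptions about the default configuration, and metric_samples is a hypothetical helper.

```shell
#!/bin/sh
# Keep only sample lines from Prometheus text output:
# drops "# HELP" / "# TYPE" comment lines and blank lines.
metric_samples() {
  grep -v -e '^#' -e '^[[:space:]]*$'
}

# Usage, from a machine that can reach the instance (for example via the bastion host):
#   curl -s "http://${CAMUNDA_IP}:9600/actuator/prometheus" | metric_samples | head
```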

Backups

Refer to the general backup and restore documentation.

When using AWS, you can utilize S3 for backing up both Zeebe and Elasticsearch / OpenSearch data.

Troubleshooting

For troubleshooting assistance, consult the troubleshooting documentation in the operational guides.