AI Agent Tool Definitions

When resolving the available tools within an ad-hoc sub-process, the AI Agent takes into account all activities that have no incoming flows (root nodes within the ad-hoc sub-process) and are not boundary events.

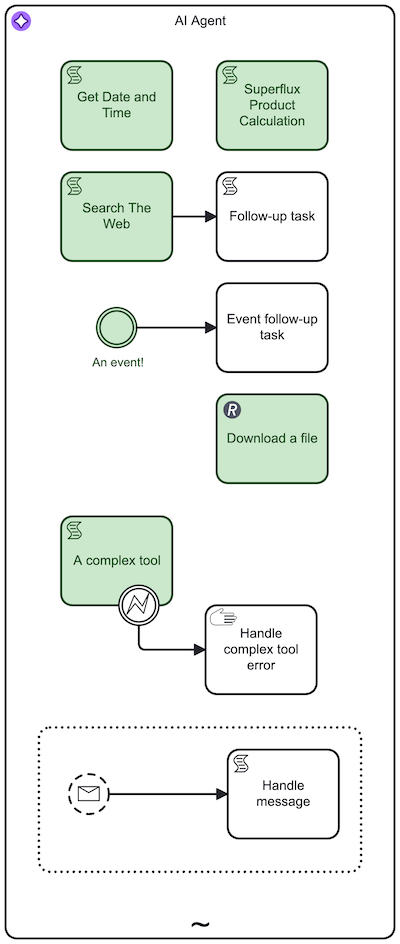

For example, in the following image the activities marked in green are the ones that will be considered as tools:

You can use any BPMN elements and connectors as tools and to model sub-flows within the ad-hoc sub-process.

Tool resolution

To resolve the available tools, the AI Agent connector either relies on data provided by the Zeebe engine or reads the BPMN model directly. The approach depends on the chosen AI Agent implementation:

- AI Agent Sub-process

- AI Agent Task

When using the AI Agent Sub-process implementation, the connector relies on data provided by the ad-hoc sub-process implementation to resolve the tools.

When using the AI Agent Task implementation, the connector reads the BPMN model directly to resolve the tools:

- It reads the BPMN model and looks up the ad-hoc sub-process using the configured ID. If not found, the connector throws an error.

- Iterates over all activities within the ad-hoc sub-process and checks that they are root nodes (no incoming flows) and not boundary events.

- For each activity found, analyzes the input mappings and looks for the

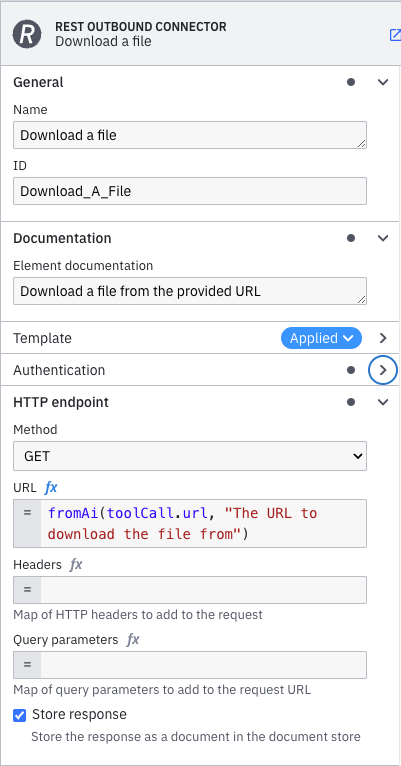

fromAifunction calls that define the parameters that need to be provided by the LLM. - Creates a tool definition for each activity found, and passes these tool definitions to the LLM as part of the prompt.
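
The lookup and root-node check in the first two steps can be sketched in Python with the standard library's xml.etree.ElementTree. This is a simplified illustration, not the connector's implementation: it assumes an element has an incoming flow when a sequence flow inside the ad-hoc sub-process targets it, and the set of candidate element types is an assumption.

```python
import xml.etree.ElementTree as ET

NS = {"bpmn": "http://www.omg.org/spec/BPMN/20100524/MODEL"}

# Assumed, simplified set of element types treated as tool candidates.
CANDIDATE_TYPES = {
    "task", "serviceTask", "userTask", "scriptTask", "sendTask",
    "receiveTask", "businessRuleTask", "manualTask", "callActivity",
    "subProcess", "intermediateThrowEvent",
}


def local_name(tag: str) -> str:
    """Strip the namespace: '{...}serviceTask' -> 'serviceTask'."""
    return tag.rsplit("}", 1)[-1]


def resolve_tool_ids(bpmn_xml: str, ad_hoc_id: str) -> list[str]:
    root = ET.fromstring(bpmn_xml.strip())
    ad_hoc = root.find(f".//bpmn:adHocSubProcess[@id='{ad_hoc_id}']", NS)
    if ad_hoc is None:
        raise ValueError(f"ad-hoc sub-process '{ad_hoc_id}' not found")

    # Every element targeted by a sequence flow has an incoming flow.
    targets = {f.get("targetRef") for f in ad_hoc.findall("bpmn:sequenceFlow", NS)}

    tool_ids = []
    for child in ad_hoc:  # direct children only; nested sub-flows are ignored
        if local_name(child.tag) not in CANDIDATE_TYPES:
            continue  # boundary events, sequence flows, extension elements, ...
        if child.get("id") in targets:
            continue  # has an incoming flow, so it is not a root node
        tool_ids.append(child.get("id"))
    return tool_ids


SAMPLE_XML = """
<bpmn:definitions xmlns:bpmn="http://www.omg.org/spec/BPMN/20100524/MODEL">
  <bpmn:process id="Process">
    <bpmn:adHocSubProcess id="Tools">
      <bpmn:serviceTask id="GetWeather"/>
      <bpmn:userTask id="AskHuman"/>
      <bpmn:scriptTask id="FollowUp"/>
      <bpmn:sequenceFlow id="Flow1" sourceRef="AskHuman" targetRef="FollowUp"/>
      <bpmn:boundaryEvent id="Timeout" attachedToRef="GetWeather"/>
    </bpmn:adHocSubProcess>
  </bpmn:process>
</bpmn:definitions>
"""

print(resolve_tool_ids(SAMPLE_XML, "Tools"))  # ['GetWeather', 'AskHuman']
```

FollowUp is excluded because it is reached through a sequence flow (it belongs to the AskHuman sub-flow), and the boundary event is skipped.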

Tool definitions

The AI Agent connector only considers the root node of the sub-flow when resolving a tool definition.

A tool definition consists of the following properties, which are passed to the LLM. The tool definition is closely modeled after the list tools response defined in the Model Context Protocol (MCP).

| Property | Description |
|---|---|
| name | The name of the tool. This is the ID of the activity in the ad-hoc sub-process. |
| description | The description of the tool, used to inform the LLM of the tool's purpose. If the documentation of the activity is set, it is used as the description; otherwise, the name of the activity is used. Provide a meaningful description to help the LLM understand the purpose of the tool. |
| inputSchema | The input schema of the tool, describing its input parameters. The connector analyzes all input mappings of the activity and creates a JSON Schema based on the fromAi function calls defined in these mappings. If no fromAi function calls are found, an empty JSON Schema object is returned. |

Provide as much context and guidance in tool definitions and input parameter definitions as you can to ensure the LLM selects the right tool and generates proper input values.

Refer to the Anthropic documentation for tool definition best practices.

AI-generated parameters via fromAi

Within an activity, you can define parameters which should be AI-generated by tagging them with the fromAi FEEL function in input mappings.

The function itself does not implement any logic (it simply returns the first argument it receives), but provides a way to configure all the necessary metadata (for example, description and type) to generate an input schema definition. Tool schema resolution collects all fromAi definitions within an activity and combines them into an input schema for the activity.

The first argument passed to the fromAi function must be a reference to a field within the toolCall context which will automatically be populated by the AI Agent connector. Example: toolCall.myParameter.

By using the fromAi function call as a wrapper around the actual value, the connector can both describe the parameter for the LLM (by generating a JSON Schema from the function calls) and use the LLM-generated value like any other process variable.

You can use the fromAi function in:

- Input mappings (for example, service task, script task, user task).

- Custom input fields provided by an element template applied to the activity, as these are technically handled as input mappings.

For example, the following image shows fromAi function usage on a REST outbound connector:

fromAi examples

The fromAi FEEL function can be called with a varying number of parameters to define simple or complex inputs. The simplest form is to just pass a value:

fromAi(toolCall.url)

This makes the LLM aware that it needs to provide a value for the url parameter. As the first argument to fromAi needs to be a variable reference, the last segment of the reference is used as the parameter name (url in this case).

To make an LLM understand the purpose of the input, you can add a description:

fromAi(toolCall.url, "Fetches the contents of a given URL. Only accepts valid RFC 3986/RFC 7230 HTTP(s) URLs.")

To define the type of the input, you can add a type (if no type is given, it defaults to string):

fromAi(toolCall.firstNumber, "The first number.", "number")

fromAi(toolCall.shouldCalculate, "Defines if the calculation should be executed.", "boolean")

For more complex type definitions, the fourth parameter of the function allows you to specify a JSON Schema from a FEEL context. Note that support for complex JSON Schema features may be limited by the selected provider/model. For a list of examples, refer to the JSON Schema documentation.

fromAi(
  toolCall.myComplexObject,
  "A complex object",
  "string",
  { enum: ["first", "second"] }
)

To mark a parameter as optional, you can use the options parameter:

fromAi(
  toolCall.optionalParameter,
  "An optional parameter",
  "string",
  null,
  { required: false }
)

Or using named parameters:

fromAi(
  value: toolCall.optionalParameter,
  description: "An optional parameter",
  options: { required: false }
)

You can combine multiple parameters within the same FEEL expression, for example:

fromAi(toolCall.firstNumber, "The first number.", "number") + fromAi(toolCall.secondNumber, "The second number.", "number")

For more examples, refer to the fromAi documentation.

Tool call responses

To collect the output of the called tool and pass it back to the agent, the task within the ad-hoc sub-process needs to set its output to a predefined variable name. For the AI Agent Sub-process implementation, this variable is predefined as toolCallResult. For the AI Agent Task implementation, the variable depends on the configuration of the multi-instance execution, but is also typically named toolCallResult.

Depending on the task type, you can set the variable content in multiple ways:

- A result variable, or a result expression containing a toolCallResult key.

- An output mapping creating the toolCallResult variable or adding to a part of the toolCallResult variable (for example, an output mapping could be set to toolCallResult.statusCode).

- A script task that sets the toolCallResult variable.
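
The following Python sketch models how several output mappings targeting parts of toolCallResult could compose into one variable. It assumes that a dotted target such as toolCallResult.statusCode is merged into an existing context rather than replacing it; apply_output_mapping is a hypothetical helper, not a Zeebe API.

```python
from typing import Any


def apply_output_mapping(variables: dict[str, Any], target: str, value: Any) -> None:
    """Sketch: write value to a dotted target, merging into nested dicts."""
    *parents, leaf = target.split(".")
    current = variables
    for segment in parents:
        # Create the intermediate context if it does not exist yet.
        if not isinstance(current.get(segment), dict):
            current[segment] = {}
        current = current[segment]
    current[leaf] = value


variables: dict[str, Any] = {}
apply_output_mapping(variables, "toolCallResult.statusCode", 200)
apply_output_mapping(variables, "toolCallResult.body", {"temperature": 21})
print(variables)
# {'toolCallResult': {'statusCode': 200, 'body': {'temperature': 21}}}
```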

Tool call results can be either primitive values (for example, a string) or complex ones, such as a FEEL context, which is serialized to a JSON string before being passed to the LLM.

As most LLMs expect some form of response to a tool call, the AI Agent returns a constant string to the LLM indicating that the tool was executed successfully without returning a result if the toolCallResult variable is not set or is empty after the tool executes.
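
A minimal Python sketch of this behavior, assuming that an empty string, context, or list counts as empty and that string results are passed through unchanged. NO_RESULT_PLACEHOLDER is a hypothetical stand-in; the actual constant returned by the AI Agent is not specified here.

```python
import json
from typing import Any

# Hypothetical placeholder, not the AI Agent's actual constant.
NO_RESULT_PLACEHOLDER = "Tool executed successfully without a result."


def tool_call_content(tool_call_result: Any) -> str:
    """Sketch: turn a toolCallResult value into the text sent to the LLM."""
    if tool_call_result is None or tool_call_result in ("", {}, []):
        return NO_RESULT_PLACEHOLDER
    if isinstance(tool_call_result, str):
        return tool_call_result  # assumed: strings are passed through as-is
    # Contexts (dicts), lists, numbers and booleans are serialized to JSON.
    return json.dumps(tool_call_result)


print(tool_call_content({"statusCode": 200}))  # {"statusCode": 200}
```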

Document support

Similar to the user prompt Documents field, tool call responses can contain Camunda Document references within arbitrary structures (supporting the same file types as for the user prompt).

When serializing the tool call response to JSON, document references are transformed into a content block containing the plain text or base64-encoded document content before being passed to the LLM.
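
The transformation can be sketched in Python as a recursive walk over the tool call result. DocumentRef, the load callback, and the content block shapes are hypothetical simplifications: real Camunda Document references and provider-specific content blocks look different, and only text/* content is treated as plain text here.

```python
import base64
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class DocumentRef:
    """Hypothetical stand-in for a Camunda Document reference."""
    document_id: str
    content_type: str


def to_content_block(ref: DocumentRef, data: bytes) -> dict[str, Any]:
    # Text documents are inlined as plain text, everything else as base64.
    if ref.content_type.startswith("text/"):
        return {"type": "text", "text": data.decode("utf-8")}
    return {
        "type": "base64",
        "mediaType": ref.content_type,
        "data": base64.b64encode(data).decode("ascii"),
    }


def resolve_documents(value: Any, load: Callable[[DocumentRef], bytes]) -> Any:
    """Recursively replace document references within dicts and lists."""
    if isinstance(value, DocumentRef):
        return to_content_block(value, load(value))
    if isinstance(value, dict):
        return {k: resolve_documents(v, load) for k, v in value.items()}
    if isinstance(value, list):
        return [resolve_documents(v, load) for v in value]
    return value


store = {"doc-1": b"hello", "doc-2": b"\x89PNG"}
result = {"summary": "done",
          "files": [DocumentRef("doc-1", "text/plain"), DocumentRef("doc-2", "image/png")]}
resolved = resolve_documents(result, lambda ref: store[ref.document_id])
print(resolved["files"][0])  # {'type': 'text', 'text': 'hello'}
```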

Gateway tool definitions

Gateway tools are activities that expose multiple tools from an external source, such as an MCP server or an A2A agent. Unlike static tool definitions, gateway tools discover their available tools dynamically during agent initialization by calling the external source.

To configure an activity as a gateway tool, set the extension property io.camunda.agenticai.gateway.type on the activity. The property value specifies which gateway implementation to use (for example, mcpClient). The agent must also have access to a handler for the specified gateway type. Custom implementations can be made available to the agent in self-managed or hybrid setups.

For more details, see the available gateway tool implementations: