Azure OpenAI connector

The Azure OpenAI connector is an outbound connector that allows you to interact with Azure OpenAI models from your BPMN processes.

The Azure OpenAI connector currently supports only the following prompt operations:

- Completions

- Chat completions

- Completion extensions

Refer to the official models documentation to check whether a given model supports these operations.

Prerequisites

To begin using the Azure OpenAI connector, make sure you have created an Azure OpenAI resource and deployed a model to it. You also need a valid Azure OpenAI API key.

Learn more at the official Azure OpenAI portal entry.

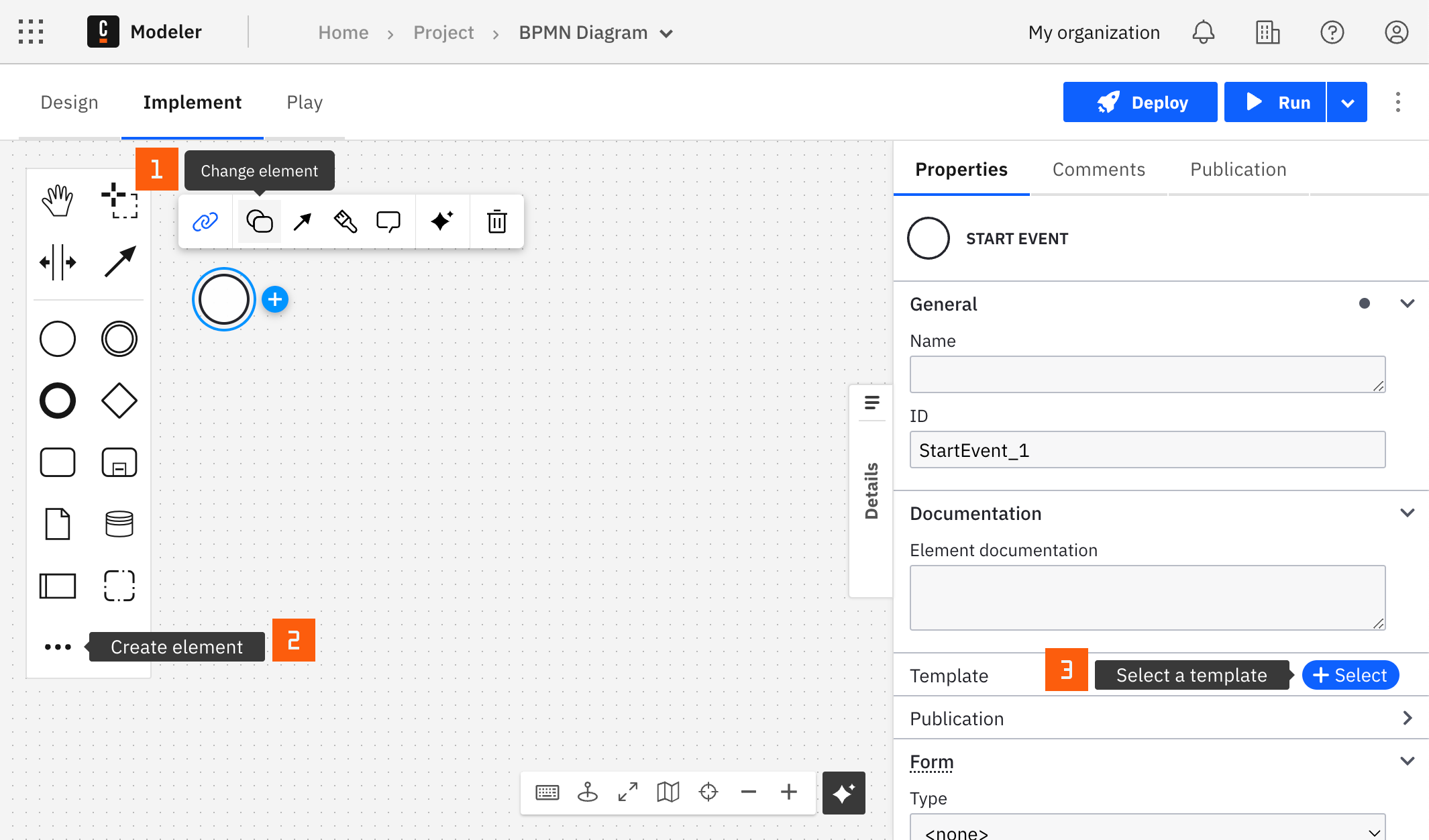

Create an Azure OpenAI connector task

You can apply a connector to a task or event in several ways, for example:

- From the canvas: Select an element and click the Change element icon to change an existing element, or use the append feature to add a new element to the diagram.

- From the properties panel: Navigate to the Template section and click Select.

- From the side palette: Click the Create element icon.

After you have applied a connector to your element, follow the configuration steps or see using connectors to learn more.

Make your Azure OpenAI connector executable

To work with the Azure OpenAI connector, fill in all mandatory fields.

Authentication

Fill in the API key field with a valid Azure OpenAI API key. Learn more about obtaining a key.
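For context, the Azure OpenAI REST API accepts key-based authentication through an `api-key` request header rather than an `Authorization: Bearer` header. The Python sketch below shows the headers such a request would carry, assuming the connector authenticates the same way a direct REST call does; `build_auth_headers` is a hypothetical helper, not part of the connector.

```python
def build_auth_headers(api_key: str) -> dict[str, str]:
    """Return headers for a key-authenticated Azure OpenAI REST call.

    Hypothetical helper: Azure OpenAI expects the key in an `api-key`
    header, and request bodies are sent as JSON.
    """
    if not api_key:
        raise ValueError("An Azure OpenAI API key is required")
    return {
        "api-key": api_key,
        "Content-Type": "application/json",
    }


headers = build_auth_headers("<your-api-key>")
print(headers["api-key"])  # prints <your-api-key>
```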

Create a new connector secret

Keep your API key safe and avoid exposing it in the BPMN XML file by creating a secret:

- Follow our guide for creating secrets.

- Name your secret (for example, AZURE_OAI_SECRET) so you can reference it later in the connector.
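Conceptually, the BPMN XML stores only a placeholder such as `{{secrets.AZURE_OAI_SECRET}}`, and the real key is substituted at runtime. The following Python snippet is a hypothetical illustration of that substitution, not Camunda's implementation; `resolve_secrets` and the in-memory `store` dictionary are assumptions made for the example.

```python
import re

# Matches placeholders such as {{secrets.AZURE_OAI_SECRET}}
SECRET_PATTERN = re.compile(r"\{\{secrets\.([A-Za-z0-9_]+)\}\}")


def resolve_secrets(value: str, store: dict[str, str]) -> str:
    """Replace every {{secrets.NAME}} placeholder with its stored value.

    Hypothetical helper: raises KeyError if a referenced secret is missing.
    """
    return SECRET_PATTERN.sub(lambda match: store[match.group(1)], value)


# The BPMN XML only ever contains the placeholder string.
field_value = "{{secrets.AZURE_OAI_SECRET}}"
store = {"AZURE_OAI_SECRET": "<your-api-key>"}
print(resolve_secrets(field_value, store))  # prints <your-api-key>
```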

Operation

Select the desired operation from the Operation dropdown. Fill in the Resource name, the Deployment ID, and the API version related to your operation. Ensure the deployed model supports the selected operation.
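The resource name, deployment ID, and API version together identify the Azure OpenAI endpoint that a request goes to. The Python sketch below composes that URL for each operation, assuming the connector follows the path layout of the Azure OpenAI REST reference (the `extensions/chat/completions` path applies to the API versions that offered completion extensions; check the reference for your version). The function name and the operation keys are hypothetical.

```python
from urllib.parse import urlencode

# Hypothetical operation keys mapped to their assumed REST path suffixes.
OPERATION_PATHS = {
    "completion": "completions",
    "chat_completion": "chat/completions",
    "completion_extension": "extensions/chat/completions",
}


def build_endpoint(resource_name: str, deployment_id: str,
                   api_version: str, operation: str) -> str:
    """Compose the Azure OpenAI endpoint URL for one operation."""
    try:
        path = OPERATION_PATHS[operation]
    except KeyError:
        raise ValueError(f"Unsupported operation: {operation}") from None
    query = urlencode({"api-version": api_version})
    return (f"https://{resource_name}.openai.azure.com/openai/deployments/"
            f"{deployment_id}/{path}?{query}")


print(build_endpoint("myresource", "mydeployment", "2024-02-01", "chat_completion"))
# prints https://myresource.openai.azure.com/openai/deployments/mydeployment/chat/completions?api-version=2024-02-01
```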

Completion, chat completion, and completion extension

- For completion details, refer to the related Microsoft reference documentation.

- For chat completion details, refer to the related Microsoft reference documentation.

- For completion extension details, refer to the related Microsoft reference documentation.
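The main practical difference between the first two operations is the request body: completions take a plain-text `prompt`, while chat completions take a list of `messages`, each with a `role` and `content`. The Python sketch below builds both body shapes; the helper names and the default `max_tokens` value of 100 are assumptions chosen for illustration. Only the completion and chat completion bodies are shown.

```python
import json


def completion_body(prompt: str, max_tokens: int = 100) -> dict:
    """Body for the completions operation: a plain-text prompt."""
    return {"prompt": prompt, "max_tokens": max_tokens}


def chat_completion_body(messages: list[dict[str, str]], max_tokens: int = 100) -> dict:
    """Body for the chat completions operation: role/content messages."""
    return {"messages": messages, "max_tokens": max_tokens}


print(json.dumps(completion_body("Say hello")))
print(json.dumps(chat_completion_body([{"role": "user", "content": "Say hello"}])))
```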

Handle connector response

The Azure OpenAI connector is a protocol connector, meaning it is built on top of the HTTP REST connector. Therefore, the response-handling options described for the HTTP REST connector apply here as well.

Usage example

Chat completions

Assume you have deployed a gpt-35-turbo model whose chat completions endpoint is https://myresource.openai.azure.com/openai/deployments/mydeployment/chat/completions?api-version=2024-02-01, and that you have created a connector secret named AZURE_OAI_SECRET.

Configure the connector with the following input:

- API key: {{secrets.AZURE_OAI_SECRET}}

- Operation: Chat completion

- Resource name: myresource

- Deployment ID: mydeployment

- API version: 2024-02-01

- Message role: User

- Message content: What is the age of the Universe?

- Message context: =[{"role": "system", "content": "You are a helpful assistant."}]

- Result variable: myOpenAIResponse

Leave the remaining parameters blank or at their default values.
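One plausible way these inputs combine into a chat request is that the new message (role and content) is appended to the message context, giving the model the system instruction first and the user question last. That ordering is an assumption about the connector rather than documented behavior, and `build_chat_messages` is a hypothetical helper.

```python
def build_chat_messages(context: list[dict[str, str]], role: str,
                        content: str) -> list[dict[str, str]]:
    """Append the new message to a copy of the existing context."""
    # The REST API uses lowercase role names such as "system", "user", "assistant".
    return [*context, {"role": role.lower(), "content": content}]


context = [{"role": "system", "content": "You are a helpful assistant."}]
messages = build_chat_messages(context, "User", "What is the age of the Universe?")
print(messages[-1])  # prints {'role': 'user', 'content': 'What is the age of the Universe?'}
```

Returning a new list leaves the original context untouched, so the same context can be reused for later questions.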

After the task completes, the myOpenAIResponse variable contains a result similar to the following:

    {
      "status": 200,
      "headers": {
        ...
      },
      "body": {
        "choices": [
          {
            "content_filter_results": {
              "hate": {
                "filtered": false,
                "severity": "safe"
              },
              "self_harm": {
                "filtered": false,
                "severity": "safe"
              },
              "sexual": {
                "filtered": false,
                "severity": "safe"
              },
              "violence": {
                "filtered": false,
                "severity": "safe"
              }
            },
            "finish_reason": "stop",
            "index": 0,
            "message": {
              "content": "The age of the universe is estimated to be around 13.8 billion years. This age is determined through various scientific methods, such as measuring the cosmic microwave background radiation and studying the expansion rate of the universe.",
              "role": "assistant"
            }
          }
        ],
        "created": "...",
        "id": "...",
        "model": "gpt-35-turbo",
        "object": "chat.completion",
        "prompt_filter_results": [
          {
            "prompt_index": 0,
            "content_filter_results": {
              "hate": {
                "filtered": false,
                "severity": "safe"
              },
              "self_harm": {
                "filtered": false,
                "severity": "safe"
              },
              "sexual": {
                "filtered": false,
                "severity": "safe"
              },
              "violence": {
                "filtered": false,
                "severity": "safe"
              }
            }
          }
        ],
        "usage": {
          "completion_tokens": 43,
          "prompt_tokens": 24,
          "total_tokens": 67
        }
      }
    }
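Later process steps usually need only the assistant's reply, which sits at `body.choices[0].message.content` in a result like this one. The Python sketch below reads it from a dictionary shaped like that result (trimmed for brevity); `extract_answer` is a hypothetical helper, and inside a Camunda process you would typically do the equivalent with a result expression instead.

```python
def extract_answer(response: dict) -> str:
    """Return the assistant's reply from a chat completion result (hypothetical helper)."""
    status = response.get("status")
    if status != 200:
        raise RuntimeError(f"Unexpected HTTP status: {status}")
    # Use the first choice; chat completions put the text under message.content.
    return response["body"]["choices"][0]["message"]["content"]


# Trimmed copy of the myOpenAIResponse result shown above.
my_openai_response = {
    "status": 200,
    "body": {
        "choices": [
            {
                "finish_reason": "stop",
                "index": 0,
                "message": {
                    "content": "The age of the universe is estimated to be around 13.8 billion years.",
                    "role": "assistant",
                },
            }
        ],
        "usage": {"completion_tokens": 43, "prompt_tokens": 24, "total_tokens": 67},
    },
}

print(extract_answer(my_openai_response))
```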